Verifiable Intelligence in AI: Unlocking a Reliable Future

Jan 9, 2025


Artificial Intelligence (AI) has rapidly evolved to become one of the most transformative forces of our time. From revolutionizing industries like finance and healthcare to enabling autonomous agents capable of solving complex problems, AI is reshaping how we interact with technology and make decisions. Yet, despite its immense potential, AI faces a fundamental challenge: reliability.

While modern AI models excel at generating plausible and creative outputs, their probabilistic nature often leads to critical errors, including subtle biases and outright hallucinations. These limitations not only hinder AI’s integration into high-stakes applications but also amplify concerns about misinformation, data trust, and ethical accountability. For AI to truly transform society, it must become more than powerful; it must become trustworthy, transparent, and verifiable.

This post delves into the concept of verifiable intelligence in AI and explores how innovative technologies and decentralized solutions are redefining the AI landscape. By addressing reliability and enabling independently verifiable outputs, these advancements promise to bridge the gap between AI’s theoretical potential and its practical applications, laying the foundation for a more transparent, accountable, and transformative AI ecosystem.

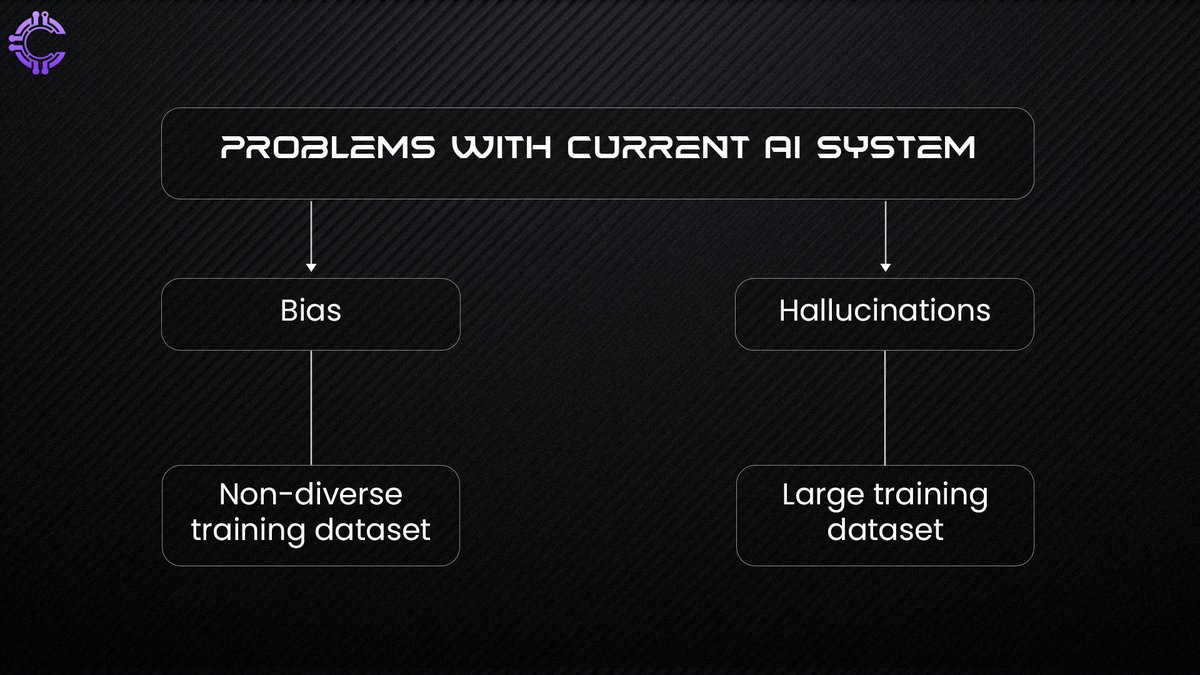

The Problem with Current AI Systems

AI systems, particularly those powered by large language models (LLMs) and generative neural networks, have demonstrated remarkable capabilities in producing text, images, and other synthetic content. However, they grapple with two critical reliability issues that limit their effectiveness and trustworthiness:

Hallucinations: AI systems often generate outputs that are fabricated, nonsensical, or factually incorrect. These hallucinations occur because AI models predict responses based on patterns in their training data rather than on grounded factual knowledge. Without a mechanism to validate their outputs, these systems can confidently present errors as truth, posing serious risks in applications like medicine or law, where accuracy is paramount.

Bias: AI models can inherit, replicate, and even amplify biases from the datasets they are trained on. These biases might reflect societal prejudices, such as racial or gender stereotypes, or subtler forms like promoting one brand over another in enterprise solutions. Bias not only affects fairness but also damages trust in AI, particularly when it reinforces systemic inequalities or delivers skewed results in critical areas like hiring, lending, or justice.

The implications of these reliability flaws go beyond inconvenience. In safety-critical systems like autonomous vehicles or healthcare diagnostics, hallucinations and bias can lead to catastrophic errors, making the quest for verifiable and reliable AI systems a moral and technical imperative.

The Trade-off Between Precision and Accuracy

At the heart of AI reliability challenges is a precision-accuracy trade-off. Reducing hallucinations often requires curating narrow, high-quality datasets, which inadvertently introduces bias by excluding diverse perspectives. Conversely, expanding datasets to reduce bias increases variability, making outputs less consistent and prone to inaccuracies.

This trade-off reveals the fundamental limitation of single-model architectures, which struggle to balance precision (consistency of outputs) and accuracy (alignment with ground truth). The solution lies in collaborative AI systems, where multiple models contribute to filtering errors and balancing biases, achieving a synergy that single models cannot.
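To make the two terms concrete, here is a small Python sketch that measures precision as the spread of a model's repeated answers and accuracy as the distance of their mean from the ground truth. The three "models", their numbers, and the helper `precision_and_accuracy` are all made up for illustration; real hallucination and bias measurement is far messier.

```python
from statistics import mean, pstdev

TRUTH = 100.0  # hypothetical ground-truth value for a numeric question


def precision_and_accuracy(samples: list[float], truth: float) -> tuple[float, float]:
    """Return (spread, bias).

    spread: population standard deviation of the samples (lower = more precise).
    bias:   absolute distance of the sample mean from the truth (lower = more accurate).
    """
    return pstdev(samples), abs(mean(samples) - truth)


# Four repeated runs per model on the same question (made-up numbers).
model_a = [110.0, 110.0, 110.0, 110.0]  # perfectly consistent, systematically high
model_b = [86.0, 94.0, 90.0, 90.0]      # fairly consistent, systematically low
model_c = [92.0, 108.0, 96.0, 104.0]    # centered on the truth, but noisy

# Naive ensemble: average the three models' answers run by run.
ensemble = [mean(run) for run in zip(model_a, model_b, model_c)]

for name, samples in [("A", model_a), ("B", model_b), ("C", model_c), ("ensemble", ensemble)]:
    spread, bias = precision_and_accuracy(samples, TRUTH)
    print(f"{name:>8}: spread={spread:.2f} bias={bias:.2f}")
```

Models A and B each miss by 10 in opposite directions, so averaging cancels their biases, and the ensemble is also less noisy than model C. That cancellation only happens because the biases point different ways; a bias shared by every model would survive the average unchanged, which is why diversity among the models matters.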

Why Verifiability Matters

The lack of verifiability in AI presents significant risks, especially in critical domains such as healthcare, autonomous vehicles, and finance. In these high-stakes environments, even minor errors can lead to catastrophic outcomes. Consider autonomous vehicles: these systems rely on AI to process vast amounts of data from sensors, cameras, and other inputs, making real-time decisions on navigation, obstacle avoidance, and safety protocols. A single error (whether from a faulty sensor, biased training data, or an AI hallucination) could result in a collision and potentially loss of life. Without a means to verify the AI’s decisions and outputs, we are left relying on systems that can neither explain nor prove the validity of their actions.

In healthcare, verifiability is equally crucial. AI-driven diagnostic tools are already being used to identify diseases, recommend treatments, and predict patient outcomes. However, an unverifiable AI system risks making misdiagnoses that could delay life-saving treatments. Ensuring that these systems are not only accurate but also independently verifiable is vital to building trust and preventing harm.

Deepfakes and Misinformation

Beyond physical safety, the digital world is grappling with a new wave of challenges fueled by misinformation and deepfakes. AI technologies have made it possible to create hyper-realistic synthetic content, from altered images and videos to entirely fabricated news stories. These tools are increasingly being weaponized to manipulate public opinion, harm reputations, and spread false information.

Verifiable AI offers a critical solution to this growing problem. By ensuring that outputs are traceable to reliable sources and validated through consensus mechanisms, verifiable AI can act as a countermeasure against misinformation. It establishes trust in digital ecosystems by creating transparent, auditable records of how outputs were generated. In a world where the line between reality and fabrication is increasingly blurred, verifiability is not just a safeguard; it’s a necessity.

Three Pillars of Verifiability in AI

![img](https://pbs.twimg.com/media/Gg3agciaMAML4Ey?format=jpg&name=medium)

Information Provenance: Understanding the origins of the data or knowledge used by an AI system. This includes identifying the source, timestamp, and context of the information.

Information Integrity: Ensuring that the data has not been tampered with or altered. This can be achieved using cryptographic methods and decentralized consensus mechanisms.

Incentivizing Quality Contributions: Building systems that reward the creation and sharing of high-quality, trustworthy data. This is essential in an age where synthetic, AI-generated content is poised to dominate.
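The first two pillars can be illustrated with a minimal Python sketch, assuming content is plain text and that a SHA-256 fingerprint is an acceptable integrity check. The `ProvenanceRecord` fields map to the source, timestamp, and context described above; the class, the helper names, and the `example://` source identifier are hypothetical. The third pillar (incentives) is an economic design question and is not modeled here.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ProvenanceRecord:
    source: str          # where the information came from (hypothetical identifier)
    timestamp: str       # when it was captured, ISO 8601 in UTC
    context: str         # free-text description of the surrounding context
    content_sha256: str  # SHA-256 fingerprint of the content itself


def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def make_record(content: str, source: str, context: str) -> ProvenanceRecord:
    """Pillar 1 (provenance): capture source, timestamp, and context plus a content hash."""
    timestamp = datetime.now(timezone.utc).isoformat()
    return ProvenanceRecord(source, timestamp, context, sha256_hex(content))


def is_untampered(content: str, record: ProvenanceRecord) -> bool:
    """Pillar 2 (integrity): the content must still hash to the recorded fingerprint."""
    return sha256_hex(content) == record.content_sha256


def record_fingerprint(record: ProvenanceRecord) -> str:
    """Hash of the metadata itself (canonical JSON), e.g. for anchoring in a ledger."""
    return sha256_hex(json.dumps(asdict(record), sort_keys=True))


fact = "Water boils at 100 degrees Celsius at sea level."
record = make_record(fact, "example://encyclopedia/water", "demo")
print(is_untampered(fact, record))                   # original text
print(is_untampered(fact.replace("100", "90"), record))  # altered text
```

A hash only proves the content has not changed since the record was made; it says nothing about whether the content was true in the first place, which is why provenance and integrity are listed as separate pillars.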

The Road to Verifiable AI

Achieving verifiable AI requires a multi-pronged approach involving decentralized consensus mechanisms, cryptographic validation, and incentives for quality knowledge creation. Let’s explore these in more detail:

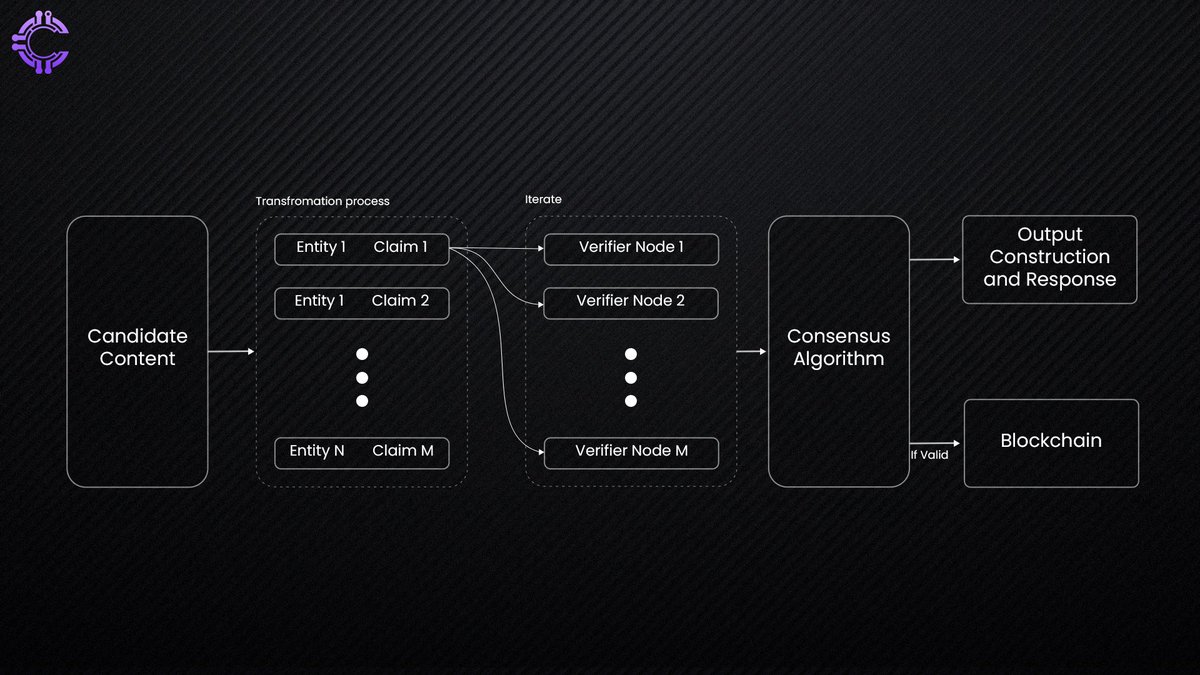

1. Decentralized Consensus for Verification

Reliability issues stem from the limitations of individual models. However, collective intelligence can address these challenges. By leveraging decentralized networks, multiple AI models can collaborate to validate outputs through consensus.

In a decentralized AI network, outputs are transformed into verifiable claims that multiple nodes independently assess. These nodes are incentivized to perform honest validation through mechanisms like hybrid Proof-of-Work (PoW) and Proof-of-Stake (PoS). This approach ensures that the system as a whole produces more accurate and less biased results than any single model could achieve.
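The voting step can be sketched in Python as a stake-weighted supermajority over independent node verdicts on a single claim. This is an illustrative assumption, not any specific network's protocol: only the stake-weighting (PoS-like) side is modeled, the "work" of actually running a model to produce each verdict is left out, and `Vote`, `stake_weighted_verdict`, and the 2/3 threshold are hypothetical choices.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Vote:
    node_id: str
    stake: float   # tokens the node has at risk (hypothetical unit)
    verdict: bool  # True = the node judges the claim valid


def stake_weighted_verdict(votes: list[Vote], threshold: float = 2 / 3) -> Optional[bool]:
    """Return True/False if either side holds at least `threshold` of total stake,
    or None when there is no supermajority (the claim stays unverified)."""
    total = sum(v.stake for v in votes)
    if total <= 0:
        return None
    yes = sum(v.stake for v in votes if v.verdict)
    if yes / total >= threshold:
        return True
    if (total - yes) / total >= threshold:
        return False
    return None


claim_votes = [
    Vote("node-1", 40.0, True),
    Vote("node-2", 35.0, True),
    Vote("node-3", 25.0, False),
]
print(stake_weighted_verdict(claim_votes))
```

Returning `None` instead of forcing a decision is a deliberate design choice here: a split network is treated as "not verified" rather than quietly picking a side.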

2. Cryptographic Validation

Cryptographic methods ensure the integrity of the data used by AI systems. By employing verifiable ledgers, AI outputs can be traced back to their source, guaranteeing that the underlying data remains untampered. This transparency is particularly vital in applications involving sensitive or regulated information.
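One common way to make a ledger verifiable is a Merkle tree: a single root hash commits to every record, and a short proof shows that one record is included without revealing the rest. The sketch below is a generic Python illustration, not the scheme of any particular network; the function names are hypothetical, and duplicating the last node on odd-sized levels is one convention among several.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    if not leaves:
        raise ValueError("cannot build a Merkle tree with no leaves")
    level = [_h(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # duplicate the last node on odd-sized levels
        level = [_h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def merkle_proof(leaves: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the bool says whether the sibling sits on the right."""
    level = [_h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        sibling = index ^ 1  # the paired node at this level
        proof.append((level[sibling], sibling > index))
        level = [_h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return proof


def verify_inclusion(leaf: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    node = _h(leaf)
    for sibling, sibling_is_right in proof:
        node = _h(node + sibling) if sibling_is_right else _h(sibling + node)
    return node == root


records = [b"source-a", b"source-b", b"source-c"]
root = merkle_root(records)
print(verify_inclusion(b"source-b", merkle_proof(records, 1), root))
```

Production trees usually also hash leaves and internal nodes with different prefixes (as RFC 6962 does) to rule out second-preimage tricks; that detail is omitted to keep the sketch short.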

3. Incentivizing High-Quality Knowledge

The future of AI depends on access to diverse, high-quality data. However, the growing prevalence of synthetic, AI-generated content risks degrading the quality of available training data. Verifiable systems incentivize the creation and sharing of authentic, real-world data, ensuring that AI systems continue to improve without succumbing to “model collapse.”

The Role of Decentralized Networks

Let’s explore two major projects working on the idea of verifiable and reliable intelligence, Mira.network and Space and Time, which demonstrate how decentralized systems can revolutionize AI verifiability.

Mira.network is reshaping how we trust AI by turning outputs into independently verifiable claims. Using a decentralized, consensus-driven framework, Mira allows multiple AI models and network nodes to validate results collaboratively. This approach not only minimizes errors but also enhances transparency, making AI systems more reliable for high-stakes applications.

Instead of relying on a single AI model, Mira leverages multiple models to cross-verify outputs. This collective validation significantly reduces errors, and rewarding node operators for honest verifications creates a trustworthy, self-sustaining network.

Mira also aims to develop advanced synthetic models capable of generating consistent, error-free outputs with minimal human intervention. Such models could act as a reliable oracle, providing verified data to power automation in blockchain ecosystems.
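The reward side of honest verification can be sketched as a settlement step run after each consensus round. This is not Mira.network's actual reward scheme: the function `settle_round`, the stake-proportional reward pool, and the flat `slash_rate` penalty are illustrative assumptions.

```python
def settle_round(
    stakes: dict[str, float],
    verdicts: dict[str, bool],
    consensus: bool,
    reward_pool: float,
    slash_rate: float,
) -> dict[str, float]:
    """Return updated stakes after one verification round (hypothetical scheme)."""
    agreeing = [node for node, verdict in verdicts.items() if verdict == consensus]
    agreeing_stake = sum(stakes[node] for node in agreeing)
    updated = dict(stakes)
    for node, verdict in verdicts.items():
        if verdict == consensus:
            if agreeing_stake > 0:
                # Reward share proportional to stake among nodes that matched consensus.
                updated[node] += reward_pool * stakes[node] / agreeing_stake
        else:
            # Disagreeing with consensus costs a fixed fraction of the node's stake.
            updated[node] -= stakes[node] * slash_rate
    return updated


stakes = {"node-1": 40.0, "node-2": 35.0, "node-3": 25.0}
verdicts = {"node-1": True, "node-2": True, "node-3": False}
print(settle_round(stakes, verdicts, consensus=True, reward_pool=15.0, slash_rate=0.1))
```

The penalty is what discourages a node from answering at random instead of actually running a model. The obvious weakness of this simple rule is that an honest minority that happens to be right is also slashed, so any real design needs more than a flat "agree with the majority" criterion.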

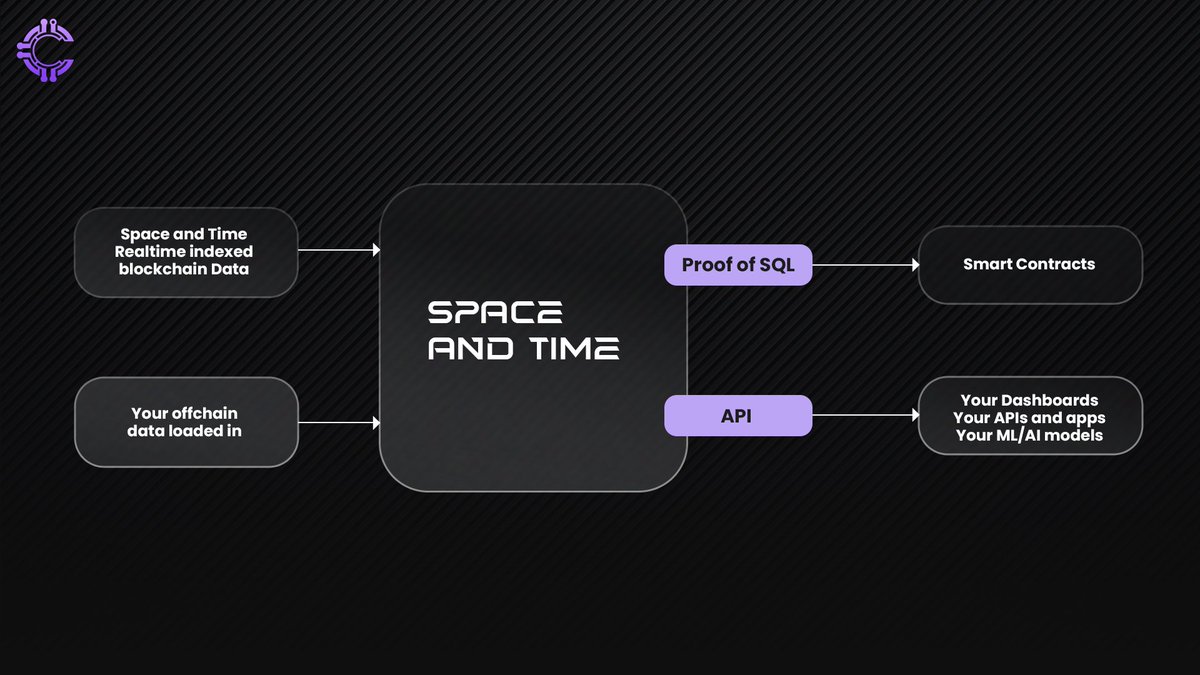

Space and Time: A Verifiable Database

While Mira.network focuses on outputs, Space and Time focuses on the integrity of the data itself. It provides a decentralized ledger whose database maintains a transparent and auditable history of all stored information, making it well suited to AI applications that require traceability and reliability. By combining cryptographic validation with decentralized storage, Space and Time promotes trust and transparency in AI systems.
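The general idea behind an auditable, tamper-evident history can be shown with a hash-chained, append-only log, where each entry commits to the hash of the one before it. This Python sketch does not describe Space and Time's actual verification machinery; `AuditLog`, its methods, and the all-zero genesis hash are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    @staticmethod
    def _digest(prev_hash: str, payload: dict) -> str:
        # Canonical JSON so the same payload always hashes the same way.
        body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, payload: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = self._digest(prev, payload)
        self.entries.append({"prev": prev, "payload": dict(payload), "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every link; any edited, removed, or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(prev, entry["payload"]):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append({"row": 1, "value": "alpha"})
log.append({"row": 2, "value": "beta"})
print(log.verify())
```

A chain like this makes tampering detectable, not impossible; whoever holds the log could rewrite it wholesale, which is why such hashes are typically published or replicated across independent parties.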

Real-World Applications:

Fighting Misinformation: Ensures AI systems can trace deepfakes and false claims back to their origins.

Trusted Decisions: Provides organizations with verifiable, high-integrity data for critical decision-making.

Applications of Verifiable Intelligence

1. Autonomous Systems

For AI to operate autonomously in high-stakes environments, such as healthcare or transportation, verifiability is non-negotiable. Autonomous vehicles, for instance, require AI systems that can reliably process sensory data, make accurate decisions, and provide verifiable reasoning for their actions.

2. Combating Misinformation

In the digital age, distinguishing fact from fiction is increasingly challenging. Verifiable AI can address this by ensuring that outputs are backed by traceable and auditable sources. This capability is crucial for maintaining trust in media, education, and public discourse.

3. Smart Contracts and Oracles

In blockchain ecosystems, smart contracts rely on external data (oracles) to execute agreements. Verifiable AI systems, like those enabled by Mira.network, ensure that these oracles provide accurate and tamper-proof data, enhancing the reliability of decentralized applications.
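A typical guard on the oracle side is to require a quorum of independent reports and discard outliers before handing a value to a contract. The median-with-quorum rule below is a common aggregation pattern used here as an illustrative assumption; it is not Mira.network's documented method, and `aggregate_reports` and its parameters are hypothetical.

```python
from statistics import median


def aggregate_reports(reports: dict[str, float], quorum: int, max_deviation: float) -> float:
    """Median of reports that lie within `max_deviation` (a fraction) of the raw median.

    Raises ValueError if too few reports arrive or too few survive outlier filtering.
    """
    if len(reports) < quorum:
        raise ValueError(f"need at least {quorum} reports, got {len(reports)}")
    raw_mid = median(reports.values())
    accepted = [v for v in reports.values() if abs(v - raw_mid) <= max_deviation * abs(raw_mid)]
    if len(accepted) < quorum:
        raise ValueError("too few reports agree; refusing to publish a value")
    return median(accepted)


reports = {"node-1": 100.0, "node-2": 101.0, "node-3": 99.0, "node-4": 250.0}
print(aggregate_reports(reports, quorum=3, max_deviation=0.05))
```

Refusing to publish when agreement is too thin is the key design choice: for a smart contract, no answer is usually safer than a wrong one.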

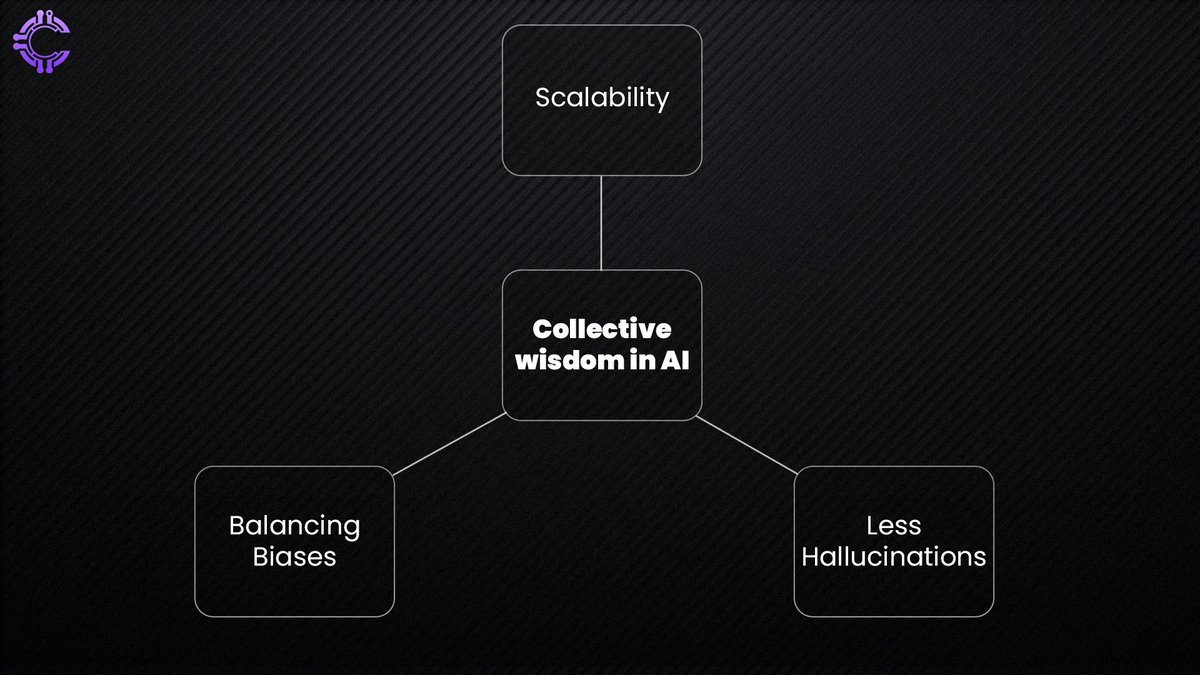

The Role of Collective Wisdom in AI

No single AI model can achieve perfect reliability. However, by combining the strengths of multiple models, decentralized networks can minimize errors and biases.

Reducing Hallucinations: By aggregating outputs from diverse models, decentralized networks can filter out inconsistencies and improve precision.

Balancing Biases: A diverse set of models can counteract individual biases, leading to more balanced and accurate outcomes.

Scalability: Decentralized networks can scale horizontally, enabling them to handle diverse, real-world scenarios more effectively than any single model.
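The "reducing hallucinations" point can be sketched as cross-model agreement filtering: keep only the claims that enough independent models produce. The sketch assumes each model's output has already been split into normalized claim strings, which is the genuinely hard part and is not shown; `agreed_claims` and the example data are hypothetical.

```python
from collections import Counter


def agreed_claims(model_outputs: dict[str, set[str]], min_support: int) -> set[str]:
    """Return claims asserted by at least `min_support` distinct models.

    Each model contributes a set, so a model repeating a claim only counts once.
    """
    counts = Counter(claim for claims in model_outputs.values() for claim in claims)
    return {claim for claim, n in counts.items() if n >= min_support}


outputs = {
    "model-1": {"Paris is the capital of France", "The Seine flows through Paris"},
    "model-2": {"Paris is the capital of France", "The Eiffel Tower was built in 1999"},
    "model-3": {"Paris is the capital of France", "The Seine flows through Paris"},
}
print(sorted(agreed_claims(outputs, min_support=2)))
```

The fabricated date is dropped because only one model asserts it. Agreement is not truth, though: if the models share a training-data error, they can agree on it, which is why diversity among the models matters as much as their number.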

Verified Intelligence = Reliable and Trustless AI

A reliable AI unlocks an entirely new realm of high-value use cases, transforming industries and enabling trustless operations without the need for constant human supervision. The current limitations of AI (hallucinations, bias, and lack of verifiability) have restricted its deployment to tasks where human oversight can catch errors. But when AI achieves reliability through verifiable intelligence, it becomes capable of handling critical and autonomous operations at scale.

Imagine autonomous vehicles that can independently navigate complex traffic scenarios with provable safety guarantees or AI-driven medical systems that provide diagnoses backed by transparent, error-free reasoning. Reliable AI enables such scenarios, offering transformative benefits in healthcare, transportation, and beyond. Moreover, it allows for the automation of high-stakes decisions, such as supply chain optimization, legal arbitration, and even environmental monitoring, all without the risk of unchecked errors.

This reliability also fosters trustless operations, where stakeholders can depend on the system’s outputs without needing to validate them manually. Decentralized AI networks and verifiable databases further enhance this trust by providing transparent, auditable systems. Reliable AI not only expands the scope of what machines can do but also lays the foundation for a future where automation works seamlessly, accurately, and without bias in critical areas of society.

A Vision for the Future

Imagine a world where autonomous systems, ranging from self-driving cars to AI-driven healthcare diagnostics, operate with near-perfect reliability. In this world, users can trust that every decision made by an AI system is based on accurate, verifiable information. Decentralized AI networks and verifiable databases will be the foundation of this vision, ensuring that AI fulfills its transformative potential without compromising on safety, trust, or accountability.

Conclusion: Building a Trustworthy AI Future

The future of AI hinges on its ability to be not only powerful but also trustworthy. Verifiable intelligence represents a critical evolution in addressing AI’s most pressing challenges: hallucinations, biases, and lack of transparency. By integrating decentralized consensus mechanisms, cryptographic validation, and incentives for high-quality data, we can create systems that don’t just deliver outputs but prove their validity.

This transformation is more than technical; it is foundational. Reliable AI has the potential to revolutionize industries, from healthcare and transportation to finance and public policy. Imagine autonomous systems that operate with unwavering accuracy, or AI-driven applications that combat misinformation with traceable and auditable insights. These advancements go beyond convenience, offering societal benefits that could reshape trust in technology itself.

The path to verifiable AI is not without challenges, but its promise is undeniable. In a world increasingly reliant on intelligent systems, we must prioritize reliability to unlock AI’s full potential. Verifiable intelligence is not just a milestone; it’s the foundation of a future where AI empowers humanity with trust, transparency, and transformative impact. Let us work toward this future, where every decision made by AI is not only powerful but provably right.

About Cluster Protocol

Cluster Protocol is the coordination layer for AI agents: a Carnot engine fueling the AI economy. It ensures that AI developers are monetized for their models and that users get a unified, seamless experience for building their next AI app or agent within a virtual, disposable environment that facilitates the creation of modular, self-evolving AI agents.

Cluster Protocol also supports decentralized datasets and collaborative model training environments, which reduce the barriers to AI development and democratize access to computational resources. We believe in the power of templatization to streamline AI development.

Cluster Protocol offers a wide range of pre-built AI templates, allowing users to quickly create and customize AI solutions for their specific needs. Our intuitive infrastructure empowers users to create AI-powered applications without requiring deep technical expertise.

Cluster Protocol provides the necessary infrastructure for creating intelligent agentic workflows that can autonomously perform actions based on predefined rules and real-time data. Additionally, individuals can leverage our platform to automate their daily tasks, saving time and effort.
