From Sci-Fi to Silicon Valley: How Decentralized Personal AI Could Birth Your Personal Doraemon

Nov 28, 2024

![image](https://cdn-images-1.medium.com/max/1600/1*TWBf6u_3e7OloWCqBXRTaQ.png)

The dream of personalized AI companions, much like the beloved character Doraemon, has intrigued humanity for generations. Well into the 21st century, our quest for automation, an age-old desire to simplify life, has reached new heights. Every technological breakthrough, from the wheel to electricity, has aimed to ease human effort, and now we find ourselves on the cusp of a future where intelligent assistants can cater to our every need.

Imagine a world where personalized AI not only handles mundane tasks but also offers companionship and emotional support. This vision, rooted in science fiction and animated characters, is becoming increasingly attainable as advancements in artificial intelligence accelerate. Tech leaders are unveiling AI doubles that exhibit impressive cognitive abilities, bringing us closer to the dream of having our own digital helpers.

However, this journey is not without its challenges. Ethical concerns regarding privacy and security, along with the potential for dependency on technology, raise critical questions. Moreover, technological barriers must be addressed to create truly autonomous and reliable AI companions.

In this blog, we will explore how decentralized technologies like Web3 are pivotal in shaping personalized AI experiences. By leveraging blockchain and AI integration, we can create customizable companions that adapt to individual user needs while ensuring data privacy and security. Join us as we delve into the exciting possibilities that lie ahead in the realm of personalized AI and discover how Web3 could revolutionize our interactions with technology.

The first step on the ladder of personalized AI has already been taken with the advent of digital doubles: AI agents trained on a person's own data to mimic that person.

The Rise of Digital Doubles

Much like the character Doraemon, AI assistants are becoming increasingly tangible as tech leaders unveil innovative AI doubles. This evolution has roots in early programs like ELIZA, which laid the groundwork for today’s sophisticated AI systems. A recent viral interaction featuring LinkedIn’s CEO showcases the growing public interest in digital doubles, reflecting our desire for ideal helpers in daily life.

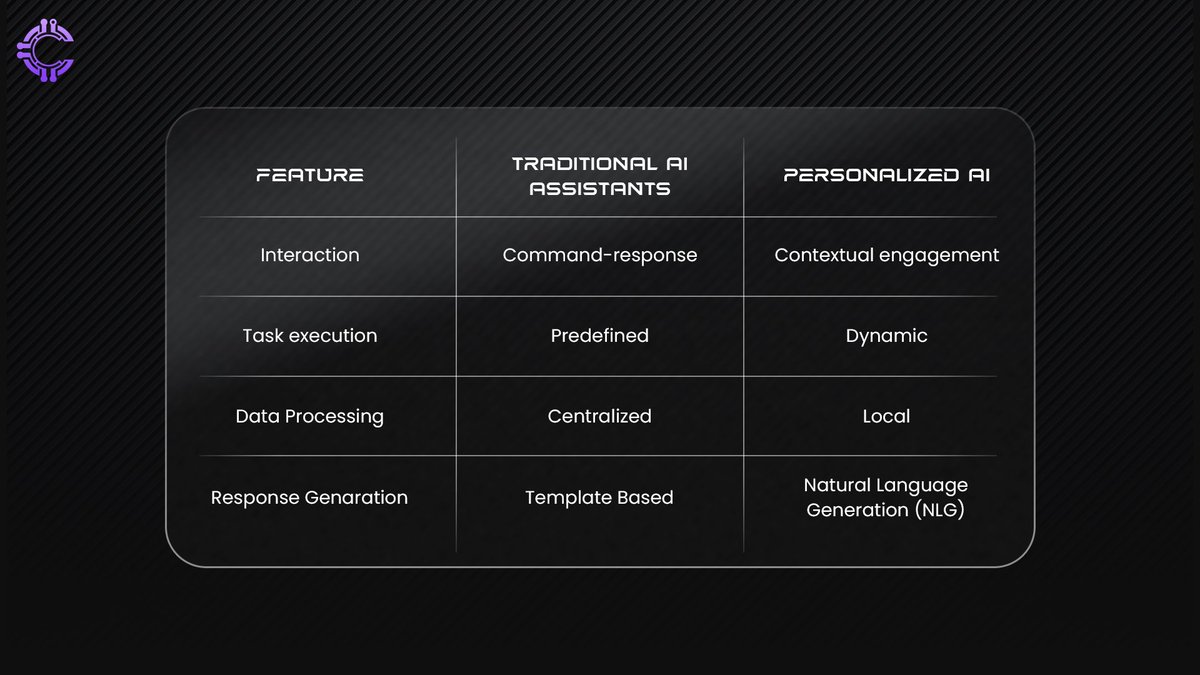

As we move toward a decentralized future, these personal AI agents promise to enhance our lives by automating tasks and providing tailored assistance. Unlike traditional AI assistants that respond to specific commands, personalized AI can learn from interactions and adapt to individual preferences, creating a more intuitive user experience. Tech giants like Microsoft and OpenAI are leading this charge, with AI capabilities that, by some estimates, now double roughly every six months.

The key difference between traditional AI assistants and personalized AI lies in their functionality and autonomy. While traditional assistants perform predefined tasks based on user input, personalized AI can proactively engage and adapt to users’ needs.

Edge AI powering personalized agents - Compute

A key difference between traditional assistants and personalized ones lies in how they handle data. Traditional AI often relies on centralized servers, which can lead to frustrating delays - high latency that hampers performance.

Moreover, trusting personal data to a centralized server poses risks: a single point of failure can result in data breaches, exposing private information.

The solution? Edge computing. By processing data locally, edge computing reduces latency and enhances performance. It keeps sensitive information closer to the user, improving security and reliability.

With edge computing, personalized AI can respond instantly and securely, creating smoother, more efficient user experiences. This shift not only empowers users but also ensures their data remains safe in an increasingly digital world. Embracing this technology means unlocking the true potential of personalized AI!

How does this work?

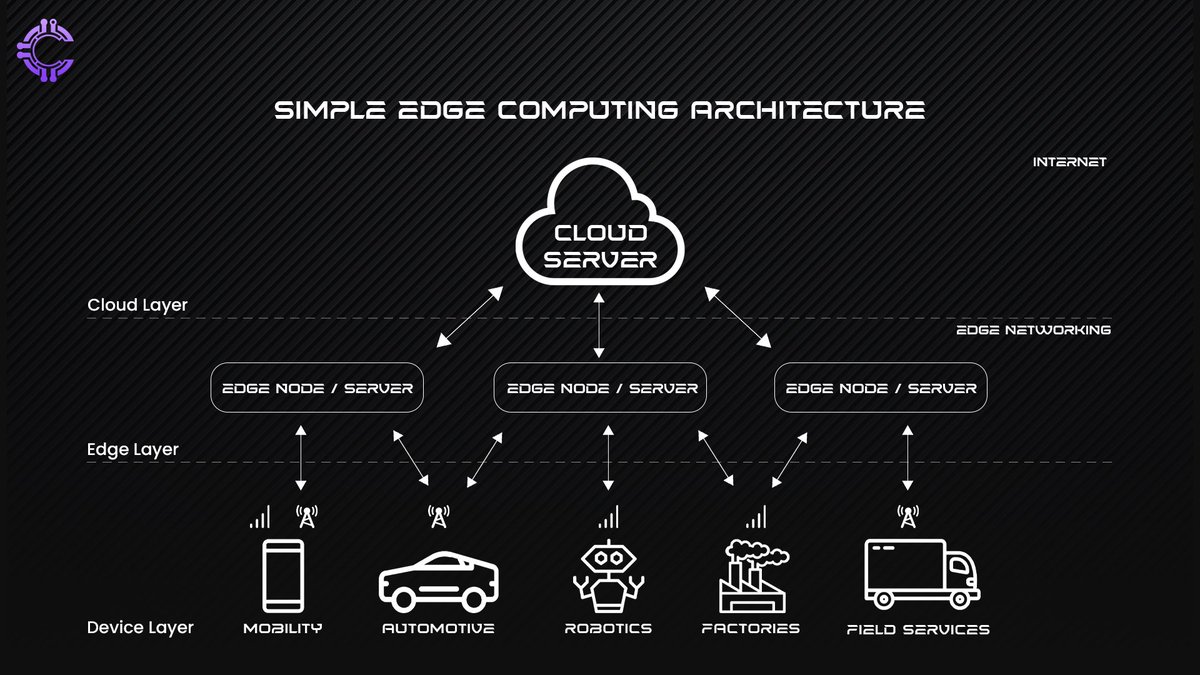

Edge AI operates through a three-layer architecture that enhances data processing and decision-making capabilities by bringing intelligence closer to the data source.

Embedded Computing Layer: IoT devices collect data from their environment, performing initial processing for real-time insights.

Edge Computing Layer: This layer filters and analyzes data locally, running AI inference models to reduce latency and bandwidth usage, enabling quick decision-making.

Cloud Computing Layer: It serves as a centralized hub for extensive data processing and model training, collecting insights from edge devices and creating feedback loops for continuous improvement.

Edge devices collect data, process it locally to filter out noise, send relevant information to the cloud for deeper analysis, and receive insights back to enhance future operations.
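To make that loop concrete, here is a minimal Python sketch of one collect, filter, infer, and feedback cycle. It is only an illustration, not a real edge framework: the function names (`edge_filter`, `edge_infer`, `cloud_ingest`), the noise threshold, and the sensor-style readings are all assumptions made up for this example.

```python
from statistics import mean

NOISE_THRESHOLD = 0.5  # hypothetical: readings this close to the baseline count as noise


def edge_filter(readings, baseline):
    """Edge layer: keep only readings that differ meaningfully from the baseline."""
    return [r for r in readings if abs(r - baseline) > NOISE_THRESHOLD]


def edge_infer(reading, baseline):
    """Edge layer: stand-in for a local inference model (a simple rule here)."""
    return "high" if reading > baseline else "low"


def cloud_ingest(events, baseline):
    """Cloud layer: aggregate edge events and send back an updated baseline."""
    if not events:
        return baseline
    return mean([baseline] + [value for value, _ in events])


def run_cycle(readings, baseline):
    """One pass: filter locally, label locally, then get feedback from the cloud."""
    relevant = edge_filter(readings, baseline)
    events = [(r, edge_infer(r, baseline)) for r in relevant]
    new_baseline = cloud_ingest(events, baseline)
    return events, new_baseline
```

Only the two unusual readings would leave the device in a call like `run_cycle([20.1, 20.3, 25.0, 19.9, 14.0], 20.0)`, which is where the bandwidth and latency savings come from.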

How does Personalized AI interact?

Contextual engagement is like having a personal assistant who understands not just what you say but also where you are, what time it is, and even your mood. It considers factors such as your location, the time of day, and your previous interactions. For instance, if you're using a restaurant app at 6 PM on a Friday night, it might suggest nearby dining options that suit your taste—because who wants to eat alone on a weekend?

This approach works wonders because personalized AI analyzes vast amounts of data in real-time. Picture it as a detective piecing together clues from your past behaviors to predict what you might need next. This ability to adapt dynamically enhances user satisfaction and drives higher conversion rates, ensuring that you receive offers and content that feel timely and relevant.

How Does It Work?

The magic of contextual engagement lies in its components:

Context Awareness: The AI understands the situation it's in by gathering details about the user and their environment.

Data Integration: It combines information from various sources, like social media or sensors, to get a complete picture.

Real-Time Processing: The AI constantly analyzes what's happening around you, allowing it to adjust its responses instantly.

Personalization: By using your preferences and past behaviors, it crafts responses that resonate with you.

Think of it as having a conversation where your AI friend not only remembers your favorite pizza topping but also knows you’re trying to eat healthier today, so it suggests a delicious salad instead!
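The pizza-versus-salad idea can be sketched in a few lines of Python. This is a toy, rule-based illustration under stated assumptions: the `Context` fields, the goal string `"eat healthier"`, and the `suggest_meal` function are hypothetical names invented here, and a real system would learn these rules rather than hard-code them.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Hypothetical snapshot of what the assistant knows right now."""
    hour: int                                       # context awareness: time of day
    location: str                                   # context awareness: where the user is
    preferences: set = field(default_factory=set)   # personalization: long-term tastes
    goals: set = field(default_factory=set)         # personalization: current intentions


def suggest_meal(ctx):
    """Combine long-term preferences with the current situation."""
    if "eat healthier" in ctx.goals:
        dish = "salad"  # a current goal overrides the usual favorite
    elif "pizza" in ctx.preferences:
        dish = "pizza"
    else:
        dish = "the chef's special"
    moment = "dinner" if 17 <= ctx.hour <= 21 else "a meal"
    return f"How about {dish} for {moment} near {ctx.location}?"
```

The design point is the ordering of the checks: what the user wants today takes priority over what they usually like.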

Natural Language Processing (NLP): The Conversational Glue

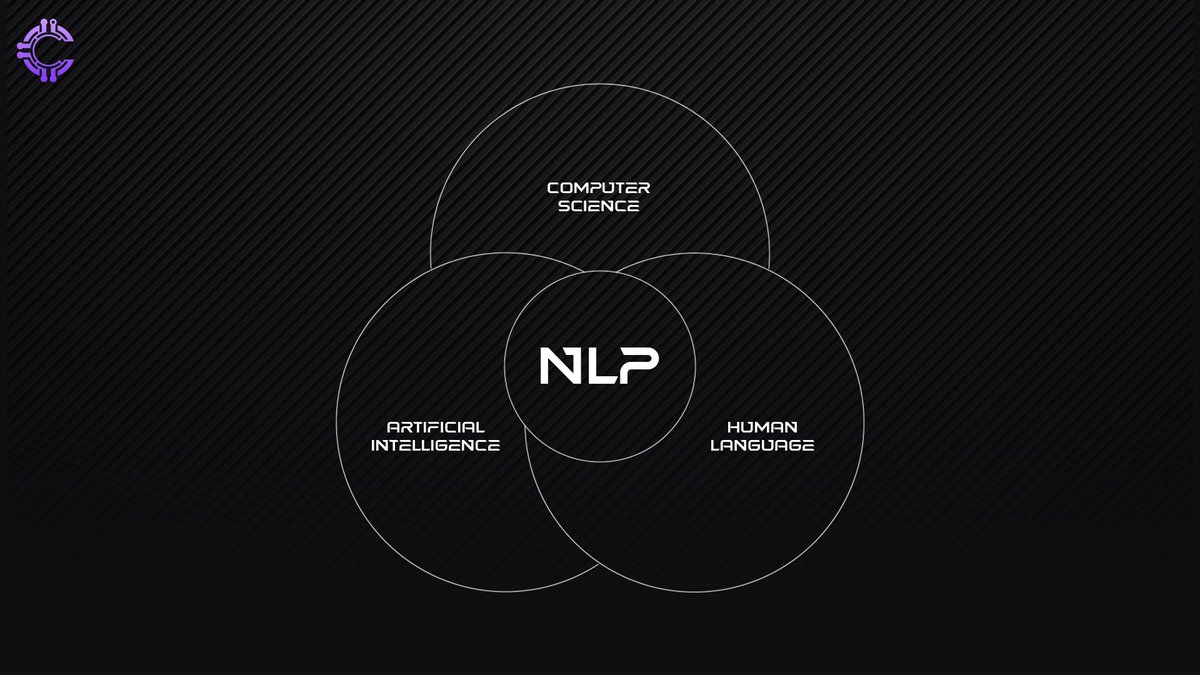

To make these interactions feel natural, personalized AI employs Natural Language Processing (NLP). This technology allows the AI to understand and generate human language.

NLP sits at the intersection of computer science, AI, and linguistics.

How does it work?

1. Tokenization: Breaking down sentences into words or phrases for easier analysis.

2. Sentiment Analysis: Gauging the emotional tone behind your words, because sometimes “I’m fine” isn’t really fine.

3. Topic Modeling: Uncovering hidden thematic structures in large volumes of text data.

With NLP, personalized AI can interpret your intent and respond in a way that feels genuinely engaging. If you express frustration about a service delay, the AI can recognize this sentiment and respond with empathy - perhaps offering an apology or compensation.
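As a rough illustration of the first two steps, the sketch below tokenizes a message and scores its sentiment with a tiny word list. Everything here is a simplifying assumption: the word lists, the `respond` wording, and the lexicon-counting approach itself, which stands in for the trained models a production system would use.

```python
import re

# Hypothetical mini-lexicons; real systems learn sentiment from data.
POSITIVE = {"great", "love", "happy", "thanks"}
NEGATIVE = {"late", "frustrated", "broken", "terrible"}


def tokenize(text):
    """Tokenization: lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text):
    """Sentiment analysis: count positive versus negative tokens."""
    tokens = tokenize(text)
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def respond(text):
    """Pick an empathetic reply when the user sounds unhappy."""
    if sentiment(text) == "negative":
        return "I'm sorry about that. Let me see how I can make it right."
    return "How can I help?"
```

A message such as "My order is late and I'm frustrated" would be classified as negative here and trigger the apology branch.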

For instance, an e-commerce platform might utilize contextual personalization by analyzing a user's browsing history and current session data to recommend products that align with their interests. This approach not only enhances user experience but also increases sales conversions significantly.

Memory and Personalization Mechanisms in AI Companions

Memory and personalization mechanisms in AI companions are essential for creating a more engaging and tailored user experience. These mechanisms enable AI systems to understand users better and adapt to their unique needs over time, fostering deeper connections and enhancing interactions.

Episodic Memory Frameworks

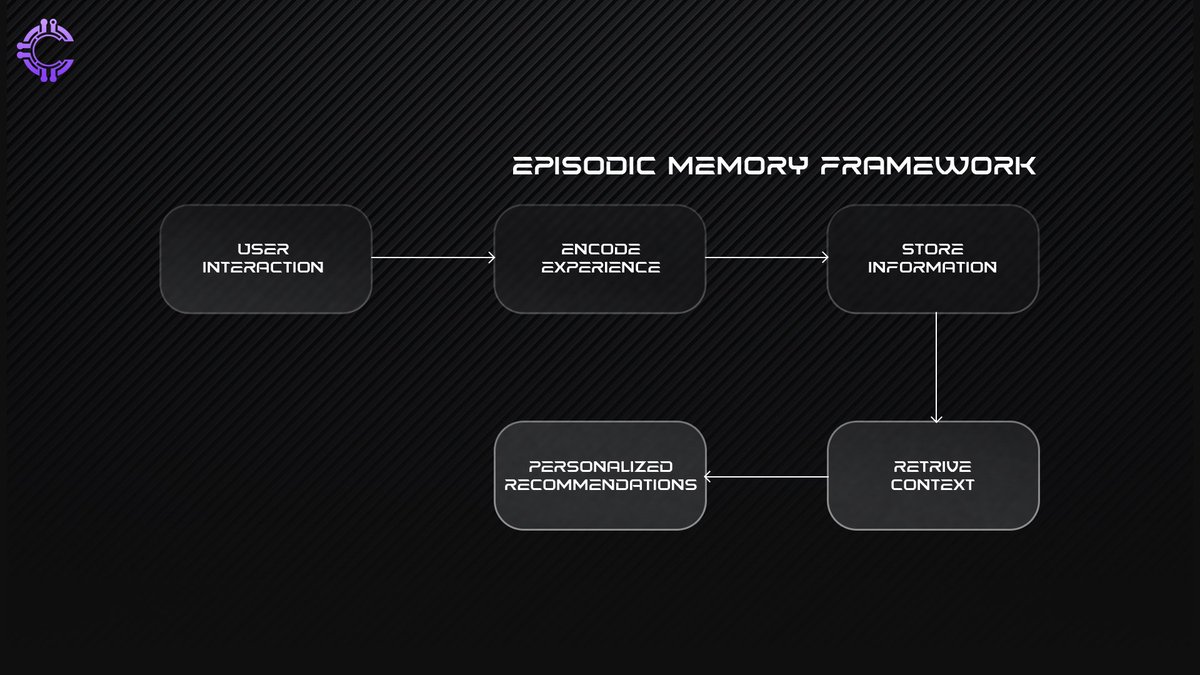

Episodic memory in AI functions much like human memory, allowing the system to store and recall specific experiences tied to particular contexts—like remembering where you went on your last vacation or what you ordered at your favorite restaurant. This framework enables AI companions to:

Encode Experiences: When a user interacts with the AI, it captures relevant details, such as preferences and feedback, creating a rich memory of past interactions.

Store Information: These memories are organized in a way that makes them easy to retrieve later, ensuring that the AI can provide contextually relevant responses in future conversations.

Retrieve Contextual Insights: When faced with a new request, the AI can draw on its episodic memories to recall similar past experiences, enhancing its ability to make personalized recommendations or decisions.

For example, if you frequently ask for travel advice, an AI with episodic memory can remember your previous trips and preferences, making it easier to suggest tailored itineraries.
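The encode, store, and retrieve steps can be sketched as a small Python class. This is a minimal sketch under assumptions: the `EpisodicMemory` and `Episode` names are hypothetical, and matching on hand-written tags is a stand-in for the embedding-based similarity search a real companion would more likely use.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    text: str
    tags: frozenset


class EpisodicMemory:
    """Toy episodic store: encode interactions, retrieve them by tag overlap."""

    def __init__(self):
        self._episodes = []  # stored in the order they happened

    def encode(self, text, tags):
        """Encode and store one interaction with its context tags."""
        self._episodes.append(Episode(text, frozenset(tags)))

    def retrieve(self, query_tags, limit=3):
        """Return the best-matching episodes, most relevant (then most recent) first."""
        query = set(query_tags)
        scored = [(len(ep.tags & query), i, ep) for i, ep in enumerate(self._episodes)]
        matches = [s for s in scored if s[0] > 0]
        matches.sort(key=lambda s: (-s[0], -s[1]))
        return [ep.text for _, _, ep in matches[:limit]]


memory = EpisodicMemory()
memory.encode("Loved the spring hiking trip to Kyoto", {"travel", "hiking", "japan"})
memory.encode("Ordered ramen at the corner place", {"food", "japan"})
memory.encode("Prefers window seats on flights", {"travel", "flights"})
```

A later travel question could then call `memory.retrieve({"travel", "hiking"})` to pull the Kyoto trip and the seat preference back into the conversation.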

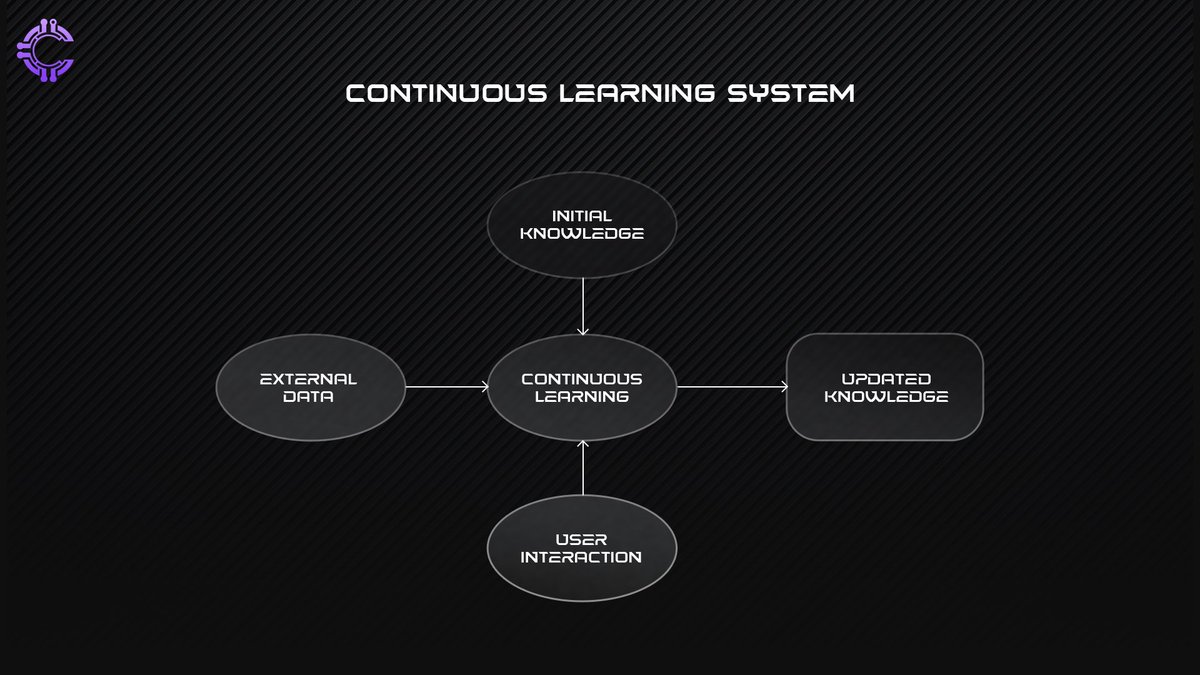

Continuous Learning Systems

Continuous learning systems empower AI companions to evolve alongside their users. Instead of being static entities that only learn from a fixed dataset, these systems continuously acquire new knowledge and adapt based on ongoing interactions. As new information becomes available - whether through user interactions or external data sources - the AI can integrate this knowledge seamlessly into its existing framework.

This means that your AI companion not only remembers your past preferences but also adapts as your tastes change or as new options become available.
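One simple way to picture this adaptation is an exponential moving average over user feedback, sketched below. This is an assumption chosen for illustration, not a description of how any particular companion learns; the `update_preference` name and the `learning_rate` value are hypothetical.

```python
def update_preference(scores, item, feedback, learning_rate=0.3):
    """Blend new feedback (0.0 to 1.0) into the stored score for an item.

    Recent feedback counts more than old feedback, so scores drift as tastes change.
    """
    current = scores.get(item, 0.0)
    scores[item] = (1 - learning_rate) * current + learning_rate * feedback
    return scores


scores = {}
for _ in range(3):                      # the user keeps choosing pizza at first
    update_preference(scores, "pizza", 1.0)
for _ in range(5):                      # later, tastes shift toward salad
    update_preference(scores, "pizza", 0.0)
    update_preference(scores, "salad", 1.0)
```

After the second loop the salad score has overtaken the pizza score, without the older preference ever being deleted outright.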

Personal Knowledge Graphs

Personal knowledge graphs are powerful tools that help organize and visualize the relationships between various pieces of information about a user.

These graphs represent connections between different aspects of a user’s life - preferences, behaviors, interests, and more. They allow AI companions to:

Map Relationships: By visualizing how different elements relate to one another, the AI can better understand the context behind user requests. For instance, if you enjoy hiking and have mentioned specific trails in conversations, the knowledge graph can link those interests to suggest related outdoor activities or gear.

Facilitate Hyper-Personalization: With a comprehensive view of your preferences and behaviors, the AI can deliver highly personalized recommendations that align closely with your interests.
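The hiking example can be sketched as a tiny graph of (subject, relation, object) triples. The class name, the relation labels `enjoys` and `pairs_with`, and the sample facts are all assumptions made for this illustration; production knowledge graphs typically sit on a dedicated graph store.

```python
from collections import defaultdict


class PersonalKnowledgeGraph:
    """Toy knowledge graph: edges are (relation, object) pairs per subject."""

    def __init__(self):
        self._edges = defaultdict(set)

    def add(self, subject, relation, obj):
        """Record one fact, e.g. ("user", "enjoys", "hiking")."""
        self._edges[subject].add((relation, obj))

    def related(self, subject, relation):
        """Map relationships: everything linked to a subject by a given relation."""
        return {o for r, o in self._edges.get(subject, ()) if r == relation}

    def suggest(self, user):
        """Follow two hops: what the user enjoys, then what pairs with it."""
        suggestions = set()
        for interest in self.related(user, "enjoys"):
            suggestions |= self.related(interest, "pairs_with")
        return suggestions


graph = PersonalKnowledgeGraph()
graph.add("user", "enjoys", "hiking")
graph.add("hiking", "pairs_with", "trail running")
graph.add("hiking", "pairs_with", "hiking boots")
```

Calling `graph.suggest("user")` walks from the user to hiking and on to the related activities and gear, which is the linking behavior described above.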

In summary, these memory and personalization mechanisms (episodic memory frameworks, continuous learning systems, and personal knowledge graphs) work together to create a more engaging and responsive experience with AI companions.

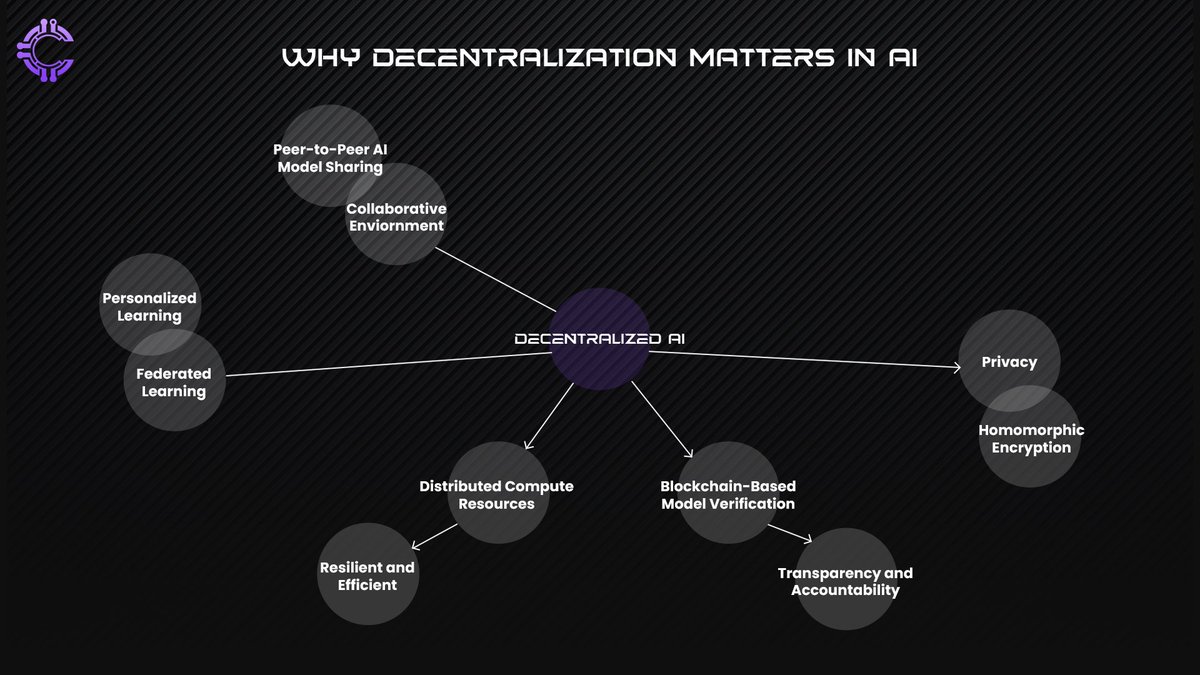

Why does Decentralization matter?

Breaking Free from the Algorithm Cage

Traditional AI is like a one-size-fits-all suit - impressive, but rarely perfect for everyone. Decentralized AI is more like a personal tailor, crafting an experience that fits you perfectly. Whether it's recommending books that genuinely spark your interest or helping you learn in a way that matches your unique cognitive style, personalized AI is about celebrating individual differences.

Privacy: Your Digital Superpower

We've all felt that creepy moment when an ad seems to read our minds. Decentralized AI flips the script. Technologies like homomorphic encryption are like magical invisibility cloaks for your data. Imagine solving complex problems without revealing your personal information—it's data protection meets cutting-edge intelligence!
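To show what "computing on hidden data" can look like, here is a toy version of the Paillier cryptosystem, one well-known additively homomorphic scheme. It is a sketch for intuition only: the primes are far too small to be secure, `random` is used where a real system would need a cryptographic random source, and the function names are chosen for this example. It needs Python 3.8 or later for the modular inverse via `pow`.

```python
import math
import random


def keygen(p=1009, q=1013):
    """Build a toy key pair from two small primes (insecure, demo only)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse; valid because the generator is n + 1
    return n, (lam, mu)


def encrypt(n, message):
    """Encrypt an integer 0 <= message < n under the public key n."""
    n_sq = n * n
    while True:
        r = random.randrange(1, n)  # fresh randomness for every ciphertext
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(n, private_key, ciphertext):
    """Recover the plaintext with the private key."""
    lam, mu = private_key
    x = pow(ciphertext, lam, n * n)
    return ((x - 1) // n * mu) % n


def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the hidden plaintexts (mod n)."""
    return (c1 * c2) % (n * n)


n, private_key = keygen()
total = add_encrypted(n, encrypt(n, 12), encrypt(n, 30))
```

Whoever runs `add_encrypted` never sees 12 or 30, yet decrypting `total` with the private key yields their sum.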

The Personal Touch: Federated Learning

Remember how your best friend knows exactly how you like your coffee? That's the magic of federated learning. Instead of big tech companies collecting massive amounts of your personal data, this approach lets AI learn from your individual experiences without compromising your privacy.
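A stripped-down version of federated averaging shows the idea: each device improves the model on its own data, and only the resulting weights are shared and averaged. This sketch assumes a one-parameter linear model `y = w * x` and made-up client data; the function names are hypothetical, and real deployments add secure aggregation, client sampling, and much larger models.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """Run a few gradient steps on one device; the raw data never leaves it."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(global_w, clients, rounds=20):
    """Server loop: collect locally trained weights and average them by data size."""
    for _ in range(rounds):
        updates = [(local_update(global_w, data), len(data)) for data in clients]
        total = sum(count for _, count in updates)
        global_w = sum(w * count for w, count in updates) / total
    return global_w


# Two hypothetical devices whose private data both follow y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0), (4.0, 12.0), (5.0, 15.0)],
]
learned_w = federated_average(0.0, clients)
```

The server only ever handles the weight values returned by `local_update`, yet `learned_w` ends up very close to the true slope of 3.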

Peer-to-Peer AI Model Sharing

Imagine a vibrant marketplace where everyone brings their best recipes to share. That’s the essence of peer-to-peer AI model sharing! Instead of relying on a central authority to control and distribute models, this approach allows users to contribute their insights and improvements directly. It fosters a collaborative environment where diverse ideas thrive, leading to richer, more adaptable AI solutions that cater to varied needs. Just like sharing your favorite coffee blend with friends, it enhances the flavor of AI interactions!

Distributed Compute Resources

Think of distributed compute resources as a potluck dinner where everyone brings a dish. In decentralized AI, computing power is spread across multiple nodes rather than concentrated in one location. This setup not only enhances efficiency but also ensures that no single point of failure can disrupt the entire system. When one node is busy or down, others can step in and keep the feast going! This resilience makes decentralized AI robust and reliable, allowing it to adapt seamlessly to user demands.

Blockchain-Based Model Verification

Now, let’s sprinkle in some blockchain magic! Blockchain-based model verification acts like a trusted referee at our potluck dinner, ensuring that every contribution is authentic and accounted for. Each model update and transaction is recorded on an immutable ledger, providing transparency and accountability in the decision-making process. Users can see how their data is used and how models evolve over time, fostering trust in the system. It’s like knowing exactly who brought what dish and how it was made—no surprises here!
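The "immutable ledger" part can be illustrated with a plain hash chain, where every entry commits to the one before it. This is a deliberately simplified sketch: it has no consensus, signatures, or network, and the entry fields and function names are assumptions made for the example rather than any specific blockchain's format.

```python
import hashlib
import json


def _hash_entry(entry):
    """Deterministic SHA-256 over an entry's fields."""
    payload = json.dumps(entry, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()


def append_update(ledger, contributor, model_bytes):
    """Record a model update, chained to the previous entry's hash."""
    prev_hash = ledger[-1]["hash"] if ledger else "0" * 64
    entry = {
        "index": len(ledger),
        "contributor": contributor,
        "model_digest": hashlib.sha256(model_bytes).hexdigest(),
        "prev_hash": prev_hash,
    }
    entry["hash"] = _hash_entry(entry)  # hash covers the four fields above
    ledger.append(entry)
    return entry


def verify(ledger):
    """Recompute every hash and link; any edit to history breaks the chain."""
    prev_hash = "0" * 64
    for entry in ledger:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _hash_entry(body):
            return False
        prev_hash = entry["hash"]
    return True


ledger = []
append_update(ledger, "alice", b"model-weights-v1")
append_update(ledger, "bob", b"model-weights-v2")
```

If someone later rewrites who contributed the first update, `verify(ledger)` returns `False`, which is the "no surprises" property the potluck referee provides.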

An Ideal AI companion

Let’s imagine an AI that's not just a tool, but a genuine companion: someone who understands you deeply, learns with you, and grows alongside you. We're stepping into an era where AI isn't just about processing power, but about creating meaningful connections.

Think of a personal AI like a really smart, incredibly adaptive friend. It needs three core superpowers:

Consistent Personality: Always feeling familiar, with predictable contextual responses.

Memory Magic: Remembering conversations, preferences, and growth moments for future reference.

Context Radar: Understanding not just what you say, but what you mean.

Learning: More Than Just Data

Personal AI isn't about collecting mountains of data - it's about understanding you. Imagine an AI that:

- Integrates personal experiences seamlessly

- Adapts to your unique behavioral patterns

- Aligns with your core values and ethical boundaries

- Uses emotional variance algorithms, which introduce controlled randomness to mimic human unpredictability

Privacy

- Homomorphic Encryption: Compute directly on encrypted data, so results stay correct while the underlying data is never exposed.

- Federated Learning: Train across many data sources without exposing raw data, reducing the risk of data breaches and avoiding a centralized single point of failure.

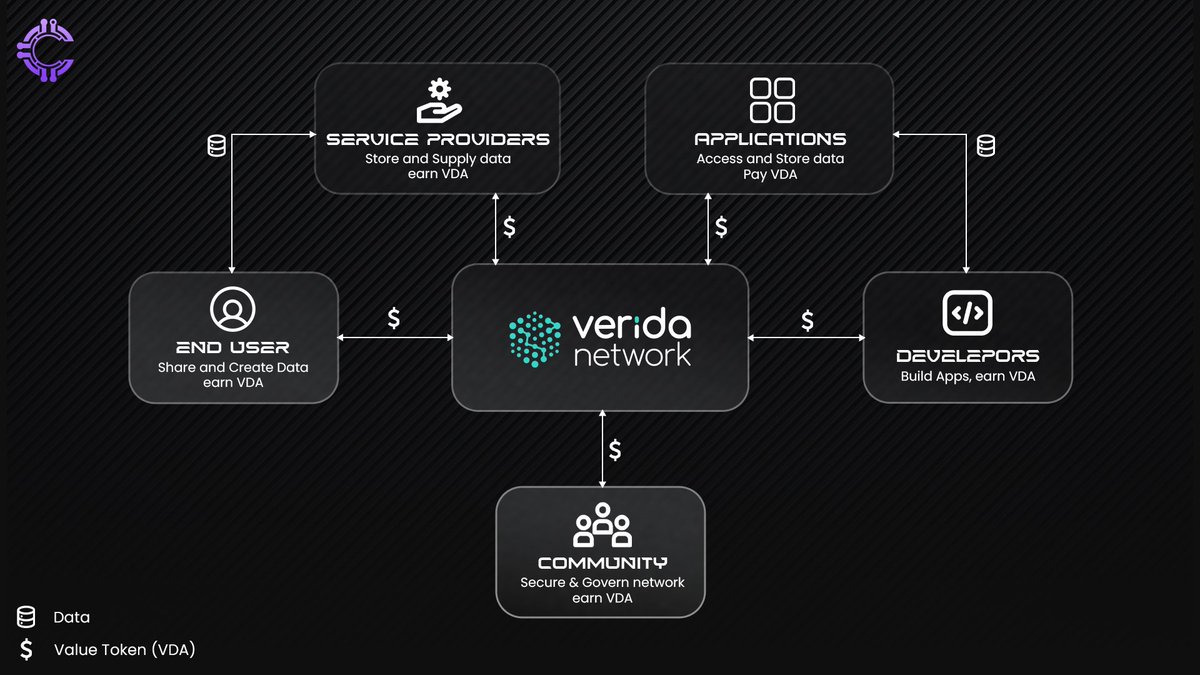

The secret to creating Personalized AI lies in an individual’s private data, essential for training AI models. However, this data is often fragmented across third-party systems, making it difficult to access while maintaining privacy. This is where Verida comes in, with its decentralized infrastructure enabling Private AI. It allows users to access their private data from various platforms to train their own AI models through the Verida Private Data Bridge.

Privacy during computing and data storage is another concern that Verida addresses with its Confidential Data Storage and compute solutions, ensuring user security. Additionally, developers can monetize the stored private data to build AI applications on top of it.

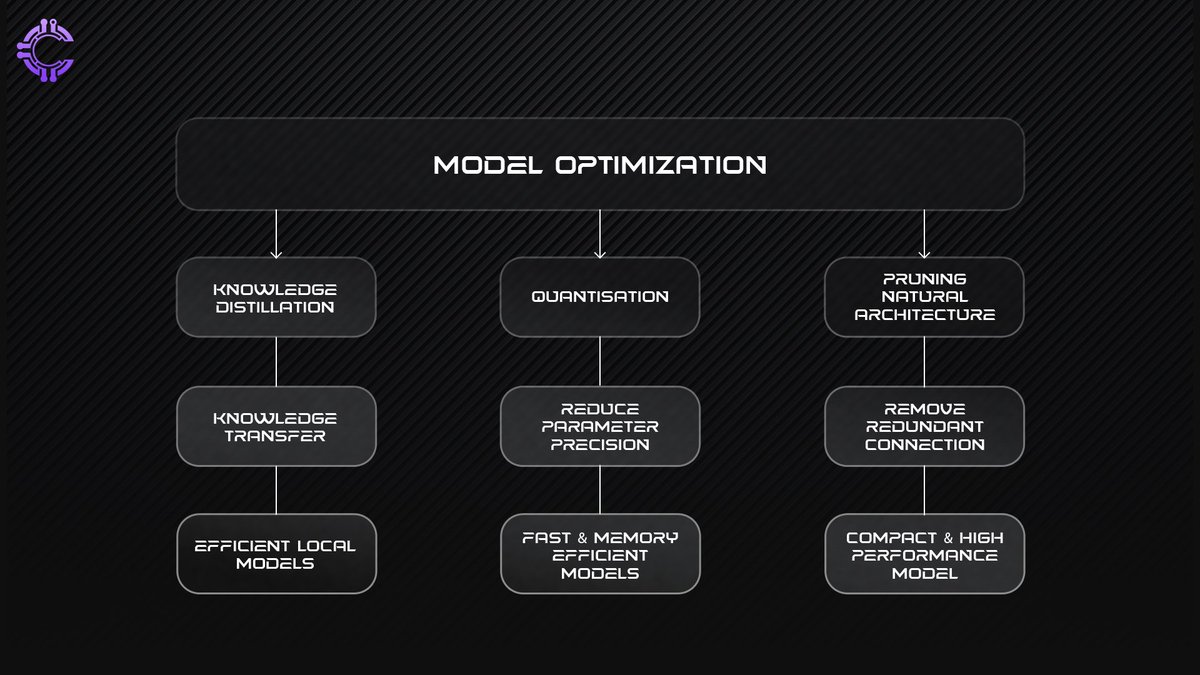

Computational Constraints: Size Matters (A Lot!)

In personalized decentralized AI, computational constraints are critical. Devices at the edge (smartphones, IoT devices) have limited processing power, memory, and energy. Unlike centralized AI systems with vast server farms, decentralized AI must run efficiently on constrained devices. Here’s how model optimization strategies tackle these challenges:

1. Knowledge Distillation: Lean Models Without Losing Smarts

Knowledge distillation transfers knowledge from a large, complex “teacher” model to a smaller “student” model. The student is trained to approximate the teacher’s outputs while requiring far less computational power. This enables personal AI agents to operate locally on edge devices while maintaining high-quality interactions, like running efficiently on your phone without relying on servers.
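At the heart of distillation is a loss that pulls the student's softened predictions toward the teacher's. The sketch below shows that loss in plain Python, assuming the common formulation with a temperature-scaled softmax and KL divergence; the function names and the example logits are made up for illustration, and a real training loop would compute this inside a deep learning framework.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn logits into probabilities; higher temperature gives softer targets."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from teacher to student on softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]          # hypothetical logits from the large model
close_student = [3.5, 1.2, 0.4]    # a student that roughly agrees
far_student = [0.5, 4.0, 1.0]      # a student that disagrees
```

Training the student means nudging its weights so that this loss shrinks, which moves `far_student` style outputs toward `close_student` style ones.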

2. Quantization: Shrinking the Numbers

Quantization reduces the precision of model parameters (e.g., from 32-bit floats to 8-bit integers) to save memory and speed up computations. In personalized decentralized AI, quantization ensures models can process user inputs quickly and efficiently without significant quality loss.
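Here is a minimal sketch of the float-to-int8 idea, assuming simple symmetric quantization with a single scale per weight list. The function names are hypothetical and the weights are invented; real toolchains also handle per-channel scales, zero points, and calibration data.

```python
def quantize(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [v * scale for v in quantized]


weights = [0.5, -1.27, 0.0, 1.0]   # hypothetical 32-bit model weights
q_weights, scale = quantize(weights)
restored = dequantize(q_weights, scale)
```

Each weight now fits in one byte instead of four, and the rounding error per weight is at most half of `scale`, which is the "without significant quality loss" trade-off in miniature.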

3. Pruning Neural Architectures: Cutting the Fat

Pruning removes redundant or less-important connections in neural networks, reducing model size and complexity. This ensures AI agents deliver high performance while conserving resources, enabling personalized recommendations or conversations without overloading devices.
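One common way to decide what counts as "less important" is weight magnitude: the smallest weights are set to zero. The sketch below assumes that criterion and a flat list of weights; the `magnitude_prune` name and the sample values are illustrative, and real pruning usually works layer by layer with fine-tuning afterwards.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute values."""
    k = int(len(weights) * sparsity)  # how many weights to remove
    if k == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[k - 1]
    pruned, removed = [], 0
    for w in weights:
        if abs(w) <= threshold and removed < k:
            pruned.append(0.0)
            removed += 1
        else:
            pruned.append(w)
    return pruned


weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02]   # hypothetical layer weights
pruned = magnitude_prune(weights, sparsity=0.5)
```

Half of the connections are gone after this call, but the three weights that carry most of the signal are untouched.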

By combining these techniques, personalized decentralized AI systems become efficient and adaptable, empowering privacy-preserving and responsive experiences on constrained hardware.

The Doraemon Roadmap

AI has made remarkable advancements, yet it still has a long way to go before achieving the sophistication and depth of a true personal companion. Let’s delve into the existing gaps that need to be addressed, the emerging solutions that are paving the way forward, and the exciting innovations that lie ahead in this journey toward creating more human-like AI experiences.

Current Challenges

Understanding Context

AI struggles to interpret subtle meanings, handle nuanced language, and synthesize information from multiple sources like text, images, or voice inputs.

Common Sense Reasoning

Cause-and-effect relationships or applying knowledge to unfamiliar scenarios still baffle AI. It’s like trying to teach common sense to a robot - it’s harder than it sounds.

Memory Issues

AI tends to overwrite old information (a problem called “catastrophic forgetting”) and often struggles with retrieving or applying stored knowledge efficiently.

Emerging Technological Solutions

Learning Paradigms

Few-Shot Learning: This is a way for machines to quickly learn and make decisions even with very few examples, like learning a new task with only a few pictures or examples.

Meta-Learning Architectures: Think of this as teaching machines how to learn better and faster. Instead of learning a specific task, they learn how to learn tasks in general.

Causal Inference Frameworks: These are methods that help machines understand cause-and-effect relationships, allowing them to make predictions about how one thing might affect another.

Hybrid Memory Innovations

Neuromorphic Memory Encoding: This is about designing memory systems for computers that mimic how the human brain works, making them more efficient at storing and recalling information.

Dynamic Knowledge Graph Maintenance: This is a system that helps keep track of complex information, continuously updating it as new data comes in, like how our brains keep updating our understanding of the world.

Episodic-Semantic Memory Fusion: This involves combining two types of memory: one that stores specific experiences (episodic memory) and another that stores general knowledge (semantic memory), allowing a more holistic way of storing and recalling information.

With breakthroughs in quantum computing and brain-inspired neuromorphic neural networks, the future of AI is looking pretty exciting. Personal AI assistants like Doraemon, once just a fun fictional character, might soon be hanging out in our homes, ready to make our lives a little smarter and a lot more fun.

Conclusion

As we stand on the brink of a new era in technology, the vision of personalized AI companions - akin to the beloved character Doraemon - becomes increasingly attainable. These digital allies promise not just to assist with daily tasks but also to provide companionship and emotional support, enhancing our lives in ways we once only dreamed of. However, this journey is not without its challenges. Ethical considerations around privacy and security, alongside the potential for over-reliance on technology, must be addressed as we navigate this evolving landscape.

Decentralized technologies, particularly those rooted in Web3, hold the key to unlocking the full potential of personalized AI. By leveraging blockchain and innovative data management techniques, we can create AI companions that are not only customizable but also prioritize user data privacy. As we explore these exciting possibilities, it’s clear that the future of personalized AI is bright - offering us a glimpse into a world where our digital companions truly understand and support us, making life a little easier and a lot more enjoyable.

About Cluster Protocol

Cluster Protocol is the coordination layer for AI agents, a Carnot engine fueling the AI economy. It ensures that AI developers are monetized for their models and that users get a unified, seamless experience for building their next AI app or agent within a virtual disposable environment, facilitating the creation of modular, self-evolving AI agents.

Cluster Protocol also supports decentralized datasets and collaborative model training environments, which reduce the barriers to AI development and democratize access to computational resources. We believe in the power of templatization to streamline AI development.

Cluster Protocol offers a wide range of pre-built AI templates, allowing users to quickly create and customize AI solutions for their specific needs. Our intuitive infrastructure empowers users to create AI-powered applications without requiring deep technical expertise.

Cluster Protocol provides the necessary infrastructure for creating intelligent agentic workflows that can autonomously perform actions based on predefined rules and real-time data. Additionally, individuals can leverage our platform to automate their daily tasks, saving time and effort.
