An analysis of the components of Kubernetes and Cluster Protocol's use of Kubernetes for efficient and decentralized compute sharing.

Jul 6, 2024

##Introduction##

Kubernetes is an open source container orchestration platform that automates the deployment, scaling, and management of containerized software, with features such as automated rollouts and self-healing.

If all of that went right over your head because you don't know what Kubernetes is, this blog is exactly for you! This article breaks down the important terms and concepts related to Kubernetes and explains the components of the Kubernetes architecture and what each of them does.

Moreover, the article will also discuss how Cluster Protocol is utilizing the Kubernetes platform to develop an efficient and decentralized compute sharing platform.

##Beginnings of Kubernetes##

Kubernetes began as an internal project at Google, leveraging the company's extensive experience with containerized applications and large-scale distributed systems. Google had been running containers in production using an internal system called Borg, which managed the deployment, scheduling, and life cycle of thousands of jobs across its data centers.

Borg's successor, Omega, experimented with different architectural approaches, further refining Google's expertise. In 2014, Google decided to create an open-source project to share this expertise with the broader community, resulting in Kubernetes, named after the Greek word for "helmsman" or "pilot." Development was led by engineers Joe Beda, Brendan Burns, and Craig McLuckie. Google announced Kubernetes in June 2014, releasing it under the Apache 2.0 license, with a design that emphasized extensibility, scalability, and community involvement.

Kubernetes quickly gained traction, attracting contributions from developers and companies like Red Hat, Microsoft, and IBM. In December 2015, the Cloud Native Computing Foundation (CNCF) was established to provide governance and support for Kubernetes and other cloud-native technologies, with Kubernetes as its first hosted project.

The first official release, Kubernetes 1.0, came on July 21, 2015, marking its readiness for production use. Since then, Kubernetes has evolved rapidly, with regular releases enhancing scalability, security, and support for various workloads and environments. Today, Kubernetes is a cornerstone of modern cloud-native infrastructure, supported by a large, active community and managed under the CNCF. With an understanding of Kubernetes's background, we can dive into the nitty-gritty of how it works.

##What is Kubernetes?##

In the introduction paragraph, Kubernetes is described as an "open source container orchestration platform". Let us break down this definition using an analogy. Consider the following scenario:

Analogy: A garden and the gardener

You have a beautiful garden set up in your backyard. The garden has many flowers that add to its serenity and vibrancy, including tulips, white and red roses, lilies, sunflowers, dahlias, and even a few lotuses. To maintain this garden, you hire a gardener. The gardener understands flowers well and knows that different flowers have different needs, such as watering times, sunlight, and soil nutrition.

So the gardener naturally allocates different amounts of resources to different flowers as needed. He also considers urgent matters like weather conditions and availability of manure. The gardener is essentially acting as an "Orchestrator" between different flowers in the garden.

This is the function of Kubernetes but in the field of software deployment and management. For you to best understand the concept, let us break down the term "container orchestration" word by word.

In the analogy, a container is a grouping of resources that share the same qualities. For example, all the lilies are kept in one container, and the roses in another container of their own.

Orchestration means managing, allocating, or distributing something. For example, in a concert the conductor coordinates the musicians of the orchestra so that they play together properly.

Together, "Container Orchestration" in software management can be interpreted as the management, allocation and distribution of containers. And what are these containers in software? Containers are the lightweight, portable, and self-sufficient units of software that encapsulate an application and its dependencies.

Finally, in Kubernetes, container orchestration is the system of automated management, coordination, and scheduling of containers within a cluster. It involves deploying, managing, scaling, networking, and monitoring containerized applications to ensure they run reliably and efficiently across multiple environments.

Today, Kubernetes is a widely used container orchestration platform, adopted by large organizations and tech startups alike. It gives businesses efficiency and consistency by automating the deployment, scaling, and management of containers within a cluster. Some of its features, such as efficient resource allocation and secure multi-tenancy, play a fundamental role in Cluster Protocol's ecosystem, which is discussed in further detail later in the article.

##How Kubernetes works##

Before looking at the individual components, it helps to understand that Kubernetes is a distributed system: a cluster is made up of several machines (nodes) connected over a network, and both the Kubernetes components and the containers they manage are spread across those nodes. The key word is distributed. Now let us look at the components that are crucial to the operation of this distributed system.

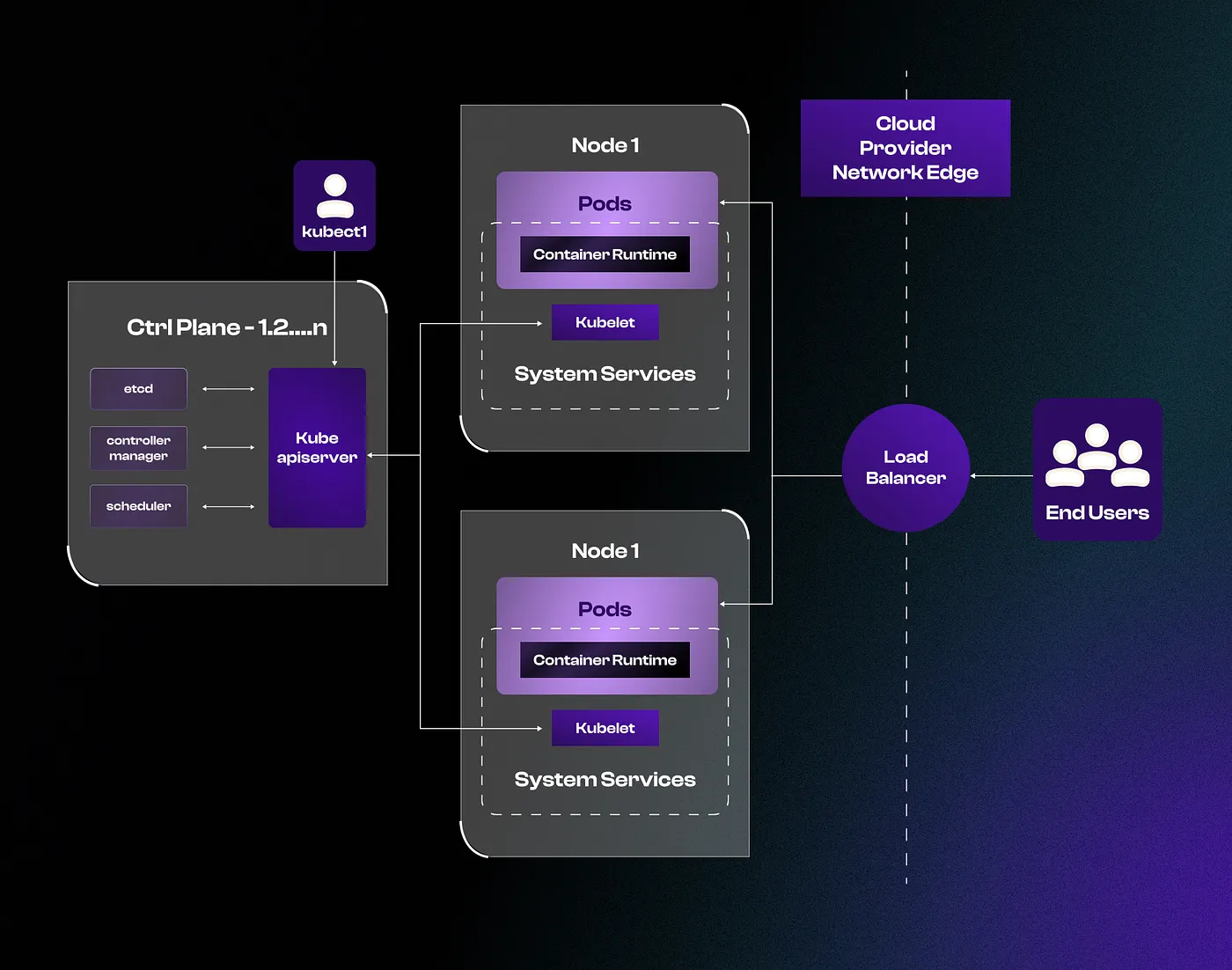

A Kubernetes cluster is made up of two main classes of nodes: control plane nodes and worker nodes. Let us first look at the control plane.

Control Plane Components

First, let's take a look at each control plane component and the important concepts behind it. The descriptions below cover the fundamental role and use case of each component.

1. Kube-API Server

The kube-apiserver is the central hub of the Kubernetes cluster and exposes the Kubernetes API. It is highly scalable and can handle a large number of concurrent requests. End users and other cluster components communicate with the cluster through the API server, and monitoring systems and third-party services may also interact with it. When you use kubectl to manage the cluster, you are talking to the API server over HTTP REST APIs. Internal components such as the scheduler and controller manager use the same API, usually with a more compact Protobuf encoding rather than JSON, while gRPC is used for the API server's connection to etcd. All communication between the API server and other components is secured with TLS to prevent unauthorized access.

The kube-apiserver is responsible for API management: it handles all API requests, supports multiple API versions simultaneously, and manages authentication and authorization using various methods. It processes API requests, validates data for API objects like pods and services, and is the only component that communicates with etcd, which we discuss next. The API server also coordinates processes between the control plane and worker node components, includes an apiserver proxy for accessing ClusterIP services from outside the cluster, and contains an aggregation layer for extending the Kubernetes API with custom resources and controllers. Lastly, the API server plays a crucial role in watching resources for changes, allowing clients to receive real-time notifications when resources are modified.
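To make the REST side of this concrete, here is a minimal sketch of how Kubernetes API paths are laid out. The `resource_path` helper is a hypothetical name used only for illustration and does not talk to a cluster; it just shows that core resources such as pods live under `/api/v1`, while grouped resources such as deployments live under `/apis/<group>/<version>`.

```python
from typing import Optional


def resource_path(resource: str,
                  namespace: Optional[str] = None,
                  name: Optional[str] = None,
                  group: str = "",
                  version: str = "v1") -> str:
    """Build the REST path of a Kubernetes resource (illustrative helper).

    Core-group resources (pods, services, nodes) live under /api/<version>;
    resources in a named group (for example "apps") live under
    /apis/<group>/<version>.
    """
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [prefix]
    if namespace is not None:
        parts.append(f"namespaces/{namespace}")
    parts.append(resource)
    if name is not None:
        parts.append(name)
    return "/".join(parts)


# Listing pods in the "default" namespace:
print(resource_path("pods", namespace="default"))
# A single deployment named "web" in the "apps" API group:
print(resource_path("deployments", namespace="default", name="web", group="apps"))
```

A client such as kubectl sends ordinary HTTP verbs (GET, POST, PATCH, DELETE) to paths of this shape, which is why the same API can serve users, controllers, and third-party tools alike.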

2. Etcd

Kubernetes's inherently distributed nature requires an efficient distributed database. Etcd acts as both a backend service discovery and a database, essentially serving as the brain of the Kubernetes cluster. Etcd is an open-source, strongly consistent, distributed key-value store, designed to run on multiple nodes without sacrificing consistency. It uses the Raft consensus algorithm for strong consistency and availability.

Etcd stores all configurations, states, and metadata of Kubernetes objects and allows clients to subscribe to events using the Watch() API, which the kube-apiserver uses to track object state changes. Etcd exposes a key-value API using gRPC, making it an ideal database for Kubernetes, and it is the only stateful component in the control plane. Etcd's integration with the API server, and through it with components like the scheduler, is crucial to the proper functioning of the cluster. Let us discuss the kube-scheduler next.
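The key-value-plus-watch idea can be sketched in a few lines. The `ToyStore` class below is a hypothetical, single-process stand-in and not the etcd client API: it has no Raft, no persistence, and no networking. It only shows how every write bumps a revision and how a watcher subscribed to a key prefix gets notified of changes.

```python
from typing import Callable, Dict, List, Optional, Tuple

# (event type, key, value, revision); value is None for deletions
Event = Tuple[str, str, Optional[str], int]


class ToyStore:
    """In-memory key-value store with prefix watches (illustration only)."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}
        self._revision = 0
        self._watchers: List[Tuple[str, Callable[[Event], None]]] = []

    def watch(self, prefix: str, callback: Callable[[Event], None]) -> None:
        # Register a callback for every future change under the prefix.
        self._watchers.append((prefix, callback))

    def put(self, key: str, value: str) -> int:
        self._revision += 1
        self._data[key] = value
        self._notify(("PUT", key, value, self._revision))
        return self._revision

    def delete(self, key: str) -> bool:
        if key not in self._data:
            return False
        self._revision += 1
        del self._data[key]
        self._notify(("DELETE", key, None, self._revision))
        return True

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def _notify(self, event: Event) -> None:
        for prefix, callback in self._watchers:
            if event[1].startswith(prefix):
                callback(event)


store = ToyStore()
store.watch("/registry/pods/", print)  # print every pod-related change
store.put("/registry/pods/default/web", "Pending")
store.put("/registry/pods/default/web", "Running")
```

The key names mimic the `/registry/...` layout Kubernetes uses in etcd, but the stored values here are plain strings chosen for the example.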

3. Kube-Scheduler

The kube-scheduler is responsible for scheduling Kubernetes pods on worker nodes. It identifies the best node for a pod based on specified requirements such as CPU, memory, affinity, taints, and tolerations. The scheduler uses filtering to find suitable nodes and scoring to rank them, selecting the highest-ranked node for pod scheduling. The scheduler listens to pod creation events in the API server and goes through a scheduling cycle to select a worker node and a binding cycle to apply the change to the cluster. It prioritizes high-priority pods and can evict or move pods if necessary. Custom schedulers can be created and run alongside the native scheduler, and the scheduler has a pluggable framework for adding custom plugins.
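The filter-then-score idea is easy to sketch. The example below is a deliberately simplified toy, not the kube-scheduler's actual plugins: the `Node` and `Pod` classes, the taint handling, and the scoring rule (prefer the node with the most resources left over) are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Node:
    name: str
    free_cpu_m: int    # free CPU in millicores
    free_mem_mi: int   # free memory in MiB
    taints: Set[str] = field(default_factory=set)


@dataclass
class Pod:
    name: str
    cpu_m: int
    mem_mi: int
    tolerations: Set[str] = field(default_factory=set)


def feasible(pod: Pod, node: Node) -> bool:
    """Filtering step: the pod must fit and tolerate every taint on the node."""
    fits = pod.cpu_m <= node.free_cpu_m and pod.mem_mi <= node.free_mem_mi
    tolerated = node.taints <= pod.tolerations
    return fits and tolerated


def score(pod: Pod, node: Node) -> int:
    """Scoring step: more leftover resources means a higher score.

    Real scoring plugins normalize and weight several signals; adding
    millicores and MiB together is only acceptable in a toy example.
    """
    return (node.free_cpu_m - pod.cpu_m) + (node.free_mem_mi - pod.mem_mi)


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    candidates = [n for n in nodes if feasible(pod, n)]
    if not candidates:
        return None  # the pod stays pending
    return max(candidates, key=lambda n: score(pod, n)).name


nodes = [
    Node("small", free_cpu_m=500, free_mem_mi=1024),
    Node("large", free_cpu_m=4000, free_mem_mi=8192),
    Node("gpu", free_cpu_m=8000, free_mem_mi=16384, taints={"gpu-only"}),
]
print(schedule(Pod("web", cpu_m=1000, mem_mi=2048), nodes))
```

Here the "small" node is filtered out for lack of CPU and the "gpu" node is filtered out because the pod does not tolerate its taint, so scoring only has one candidate left.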

4. Kube Controller Manager

The kube-controller-manager manages all Kubernetes controllers, which run continuous control loops to maintain the desired state of objects in the cluster. Each loop compares the actual state of the cluster with the state the user has declared, known as the desired state, and acts to close any gap, which makes the cluster resilient to faults. Controllers like the deployment controller, replica set controller, and others ensure that Kubernetes resources are in their desired state. For example, if a user updates a deployment to have more replicas, the deployment controller adjusts the cluster to match the new desired state. The kube-controller-manager coordinates these controllers and supports custom controllers associated with custom resource definitions.
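A control loop boils down to "observe, compare, act". The sketch below is a toy under stated assumptions: `reconcile` is a hypothetical function, pods are just names in a list, and a real controller would create or delete pods through the API server instead of editing a list.

```python
from typing import List


def reconcile(desired_replicas: int, running: List[str], name: str) -> List[str]:
    """One pass of a toy replica controller.

    Returns the pod list after creating missing pods or removing surplus
    ones so that it matches the desired replica count.
    """
    pods = list(running)
    next_id = 0
    # Scale up: add pods with unused names until the count matches.
    while len(pods) < desired_replicas:
        candidate = f"{name}-{next_id}"
        if candidate not in pods:
            pods.append(candidate)
        next_id += 1
    # Scale down: remove the most recently listed pods first.
    while len(pods) > desired_replicas:
        pods.pop()
    return pods


state = ["web-0"]
state = reconcile(3, state, "web")   # user raised replicas to 3
print(state)
state = reconcile(1, state, "web")   # user lowered replicas to 1
print(state)
```

Running the same reconcile pass again on a state that already matches changes nothing, which is the property that lets controllers run continuously without side effects.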

5. Cloud Controller Manager (CCM)

The cloud controller manager integrates Kubernetes with cloud platform APIs, allowing core Kubernetes components to operate independently while enabling cloud providers to integrate with Kubernetes using plugins. The CCM contains cloud-specific controllers that manage cloud resources such as instances, load balancers, and storage volumes. The node controller updates node-related information by interacting with the cloud provider API, the route controller configures networking routes for inter-node communication, and the service controller deploys load balancers and assigns IP addresses. Examples of CCM use include provisioning load balancers for Kubernetes services and storage volumes for pods. Overall, the CCM manages the lifecycle of cloud-specific resources used by Kubernetes.

Now let us look at the worker nodes.

Worker Node Components

1. Kubelet

Kubelet is an agent that runs on every node in the Kubernetes cluster. It runs as a daemon managed by the host's init system (typically systemd), not as a container. It registers worker nodes with the API server and processes pod specifications (podSpecs), primarily from the API server. A podSpec defines the containers to run inside the pod, their resources (e.g., CPU), and settings like environment variables, volumes, and labels. Kubelet brings the podSpec to its desired state by creating the necessary containers. It handles the creation, modification, and deletion of containers, manages liveness, readiness, and startup probes, mounts volumes based on pod configurations, and collects and reports node and pod statuses via calls to the API server using implementations like cAdvisor and CRI. Kubelet can also accept podSpecs from files, HTTP endpoints, and HTTP servers. Static pods are a notable example: they are controlled directly by Kubelet and not by the API server.

The main points about Kubelet include its use of the CRI (Container Runtime Interface) gRPC interface to communicate with the container runtime, its exposure of an HTTP endpoint for log streaming and exec sessions, its use of the CSI (Container Storage Interface) gRPC to configure block volumes, and its use of the CNI plugin for allocating pod IP addresses and setting up network routes and firewall rules.
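To show what a podSpec looks like, here is a small sketch that writes a pod manifest as a Python dictionary with the same field names the YAML form uses. The pod name, image tag, environment variable, and probe path are made-up values for illustration, and the two helper functions are hypothetical; they only demonstrate how CPU requests such as `250m` (millicores) add up across containers.

```python
from typing import Any, Dict

pod_manifest: Dict[str, Any] = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            {
                "name": "app",
                "image": "nginx:1.27",  # illustrative image tag
                "env": [{"name": "MODE", "value": "production"}],
                "resources": {"requests": {"cpu": "250m", "memory": "128Mi"}},
                "livenessProbe": {
                    "httpGet": {"path": "/healthz", "port": 80},
                    "periodSeconds": 10,
                },
            },
            {
                "name": "sidecar",
                "image": "busybox:1.36",  # illustrative image tag
                "resources": {"requests": {"cpu": "0.5"}},
            },
        ]
    },
}


def cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "250m" or "0.5" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def total_cpu_request(manifest: Dict[str, Any]) -> int:
    """Sum the CPU requests of all containers in a pod manifest."""
    total = 0
    for container in manifest["spec"]["containers"]:
        requests = container.get("resources", {}).get("requests", {})
        total += cpu_millicores(requests.get("cpu", "0"))
    return total


print(total_cpu_request(pod_manifest), "millicores requested")
```

The scheduler uses totals like this to decide where a pod fits, and the kubelet then creates the listed containers and runs the liveness probe at the configured interval.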

2. Kube-Proxy

Kube-proxy is a daemon that runs on every node, usually deployed as a DaemonSet, and implements the Kubernetes Services concept for pods by acting as a network proxy. It proxies UDP, TCP, and SCTP traffic but does not understand HTTP, so it cannot route based on HTTP-level details such as paths or headers. When a service is created in Kubernetes, it gets a virtual IP (ClusterIP) that is accessible only within the cluster. Kube-proxy creates network rules to route traffic to the backend pods grouped under the service object, handling load balancing and service discovery.

Kube-proxy communicates with the API server to get details about services and their respective pod IPs and ports (endpoints) and monitors changes. It can operate in various modes for creating and updating routing rules:

iptables: The default mode, which uses iptables rules to forward traffic to backend pods, selecting pods at random for load balancing.

IPVS: Suitable for large clusters, supporting multiple load-balancing algorithms (e.g., round-robin, least connection).

Userspace: A legacy mode that is not recommended.

Kernelspace: Specifically for Windows systems.

Additionally, Kubernetes 1.29 introduced a new nftables-based backend for kube-proxy as an alpha feature, designed to be simpler and more efficient than iptables. Kube-proxy can also be replaced by alternatives such as Cilium for advanced networking capabilities.
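The difference between random selection (as in iptables mode) and round-robin (one of the IPVS algorithms) can be sketched in user-space Python. This is only an illustration of the two selection strategies; kube-proxy itself programs kernel rules rather than picking backends in application code, and the endpoint addresses below are made up.

```python
import itertools
import random
from typing import Iterator, List


def random_backend(endpoints: List[str], rng: random.Random) -> str:
    """Pick a backend at random, in the spirit of iptables mode."""
    return rng.choice(endpoints)


def round_robin(endpoints: List[str]) -> Iterator[str]:
    """Cycle through backends in order, like IPVS round-robin."""
    return itertools.cycle(endpoints)


# Hypothetical pod endpoints behind one service
endpoints = ["10.244.1.5:8080", "10.244.2.7:8080", "10.244.3.9:8080"]

rng = random.Random()
print("random:", [random_backend(endpoints, rng) for _ in range(4)])

rr = round_robin(endpoints)
print("round-robin:", [next(rr) for _ in range(4)])
```

Random selection spreads load evenly only on average, while round-robin guarantees an even rotation, which is one reason IPVS is attractive for large clusters.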

3. Container Runtime

A container runtime is a software component required to run containers, similar to how Java Runtime (JRE) is needed to run Java programs. It runs on all nodes in the Kubernetes cluster and is responsible for pulling images from container registries, running containers, allocating and isolating resources, and managing the lifecycle of containers. The Container Runtime Interface (CRI) is a set of APIs that allow Kubernetes to interact with different container runtimes interchangeably, defining APIs for managing containers and images. The Open Container Initiative (OCI) sets standards for container formats and runtimes.

Kubernetes supports multiple container runtimes, such as CRI-O, containerd, and Docker Engine (through the cri-dockerd adapter), all of which are reached through the CRI and expose gRPC CRI APIs. The kubelet interacts with the container runtime using these CRI APIs to manage container lifecycles and provide container information to the control plane. For instance, with the CRI-O container runtime, when a pod request is received from the API server, the kubelet communicates with the CRI-O daemon to launch containers. CRI-O pulls the necessary container image from the registry, generates an OCI runtime specification, and uses an OCI-compatible runtime (like runc) to start the container as per the specification.
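The value of the CRI is that the kubelet codes against an interface and not against one runtime. The sketch below imitates that idea in plain Python: `ContainerRuntime`, `FakeRuntime`, and `launch` are hypothetical names, and the three methods are simplified stand-ins for CRI calls such as PullImage, CreateContainer, and StartContainer (the real API is gRPC and also involves pod sandboxes).

```python
from typing import List, Protocol


class ContainerRuntime(Protocol):
    """Simplified stand-in for the runtime side of the CRI."""

    def pull_image(self, image: str) -> None: ...
    def create_container(self, name: str, image: str) -> str: ...
    def start_container(self, container_id: str) -> None: ...


class FakeRuntime:
    """Records calls instead of running anything; useful for illustration."""

    def __init__(self) -> None:
        self.calls: List[str] = []
        self.running: List[str] = []

    def pull_image(self, image: str) -> None:
        self.calls.append(f"pull {image}")

    def create_container(self, name: str, image: str) -> str:
        container_id = f"{name}-0001"  # made-up ID scheme
        self.calls.append(f"create {container_id}")
        return container_id

    def start_container(self, container_id: str) -> None:
        self.calls.append(f"start {container_id}")
        self.running.append(container_id)


def launch(runtime: ContainerRuntime, name: str, image: str) -> str:
    """Kubelet-like sequence: pull the image, create, then start."""
    runtime.pull_image(image)
    container_id = runtime.create_container(name, image)
    runtime.start_container(container_id)
    return container_id


runtime = FakeRuntime()
launch(runtime, "web", "nginx:1.27")
print(runtime.calls)
```

Because `launch` depends only on the interface, swapping `FakeRuntime` for another implementation requires no change to the calling code, which mirrors how Kubernetes can switch between CRI-compatible runtimes.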

After understanding the intricacies of control plane nodes and worker nodes, a brief analysis of how Cluster Protocol plans to share compute resources in a decentralized manner is provided below.

##Leveraging Kubernetes for Cluster Protocol's automated AI Compute Ecosystem##

Automated Deployment and Scaling

Kubernetes dynamically adjusts resources to meet fluctuating AI workload demands. It offers the following features:

Horizontal scaling: Kubernetes will scale applications up and down automatically based on metrics such as CPU utilization or, through custom metrics, GPU utilization, ensuring optimal performance and resource usage. This allows resources to be distributed optimally among a pool of participants.

Automated scheduling: Kubernetes automatically schedules containers based on resource requirements and constraints, optimizing the use of resources available in the cluster.
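Horizontal scaling follows a simple proportional rule. The Horizontal Pod Autoscaler documentation gives it as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule with minimum and maximum bounds; the function name and default bounds are assumptions for the example, and it leaves out details of the real autoscaler such as the tolerance band and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Proportional scaling rule used by horizontal autoscaling.

    The metric can be any per-pod average, for example CPU or GPU
    utilization in percent.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% utilization against a 60% target
print(desired_replicas(4, 90, 60))
# Load drops to 30% utilization
print(desired_replicas(4, 30, 60))
```

In the first call the workload is running 1.5 times hotter than the target, so the replica count grows by the same factor; in the second it is at half the target, so the count is halved.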

Efficient Resource Allocation

Optimizes resource utilization for cost-effective AI processing.

Load Balancing for Responsiveness

Ensures consistent performance and responsiveness of AI applications under varying loads. Kubernetes makes this possible with the following feature:

Service discovery: Kubernetes can expose containers using DNS names or their own IP addresses. If traffic to a container is high, Kubernetes can load balance and distribute the network traffic so that the deployment remains stable.
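Service DNS names follow a predictable pattern, which is what makes discovery work without hard-coded IP addresses. The helper below is a hypothetical function written for illustration; it assumes the common default cluster domain `cluster.local`, which cluster administrators can change.

```python
def service_dns_name(service: str,
                     namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Return the in-cluster DNS name of a Kubernetes service.

    The pattern is <service>.<namespace>.svc.<cluster-domain>.
    """
    return f"{service}.{namespace}.svc.{cluster_domain}"


# A hypothetical inference service in a hypothetical "ai" namespace
print(service_dns_name("inference", namespace="ai"))
```

A pod can call another service by this name, and the cluster DNS resolves it to the service's ClusterIP, behind which traffic is balanced across the backend pods.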

Comprehensive Monitoring and Logging

Provides insights for performance optimization and troubleshooting of AI services. To secure communication within the cluster, Kubernetes also offers:

Network policies: Kubernetes provides network policies that allow you to control the communication between pods, enhancing security within the cluster.
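The core of a network policy is label matching. The sketch below is a simplified model, not the NetworkPolicy API itself: the `podSelector` key mirrors the real field, but `allowFrom` is a made-up shorthand for the real ingress rules, and only ingress between labeled pods is modeled.

```python
from typing import Dict, List

Labels = Dict[str, str]


def matches(selector: Labels, labels: Labels) -> bool:
    """A selector matches when every key/value pair is present in labels.

    An empty selector matches every pod.
    """
    return all(labels.get(key) == value for key, value in selector.items())


def ingress_allowed(policies: List[dict], source: Labels, target: Labels) -> bool:
    """Decide whether traffic from source pod to target pod is allowed."""
    selecting = [p for p in policies if matches(p["podSelector"], target)]
    if not selecting:
        # Pods not selected by any policy accept all traffic.
        return True
    # Once selected, a pod only accepts traffic some policy allows.
    return any(matches(allowed, source)
               for policy in selecting
               for allowed in policy["allowFrom"])


policies = [
    {"podSelector": {"app": "db"}, "allowFrom": [{"app": "api"}]},
]
print(ingress_allowed(policies, {"app": "api"}, {"app": "db"}))  # api -> db
print(ingress_allowed(policies, {"app": "web"}, {"app": "db"}))  # web -> db
```

The first call is permitted because the source carries the allowed label, while the second is rejected because the database pods are selected by a policy that does not list the web pods.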

Streamlined Updates with CI/CD

Enables rapid and reliable deployment of new AI models and services.

Secure Multi-Tenancy

Isolates resources and data for different users and projects within the Cluster Protocol ecosystem.

This strategic integration of Kubernetes into the Cluster Protocol empowers AI developers and users with a robust, scalable, and automated platform to efficiently build, deploy, and manage their AI applications within a decentralized compute environment.

##Conclusion##

The potential of Kubernetes in the midst of an AI revolution is enormous. By allowing organizations to orchestrate their containers effectively with minimal effort, Kubernetes has shown incredible utility and has been adopted by many major corporations, including Amazon, Microsoft, Nvidia, and Apple.

But you may wonder what the potential of Kubernetes is in decentralized compute sharing platforms like Cluster Protocol. Its main potential lies in its ability to orchestrate complex resources while still adhering to the values of decentralization. By orchestrating and managing distributed containers, Kubernetes can efficiently allocate and scale compute resources across a decentralized network, ensuring optimal performance and resource utilization.

This capability enables the seamless sharing and pooling of computational power among diverse nodes, fostering a more resilient and efficient decentralized infrastructure that aligns with the principles of Web3 and decentralization.
